Journal of Tumor Research

Open Access

ISSN: 2684-1258

Research Article - (2023) Volume 9, Issue 3

Purpose: Breast cancer causes more deaths among women than any other cancer. This research proposes a method that can detect, classify and segment different types of breast tumors. The paper also reviews the methods that have previously been used to classify and segment breast cancer.

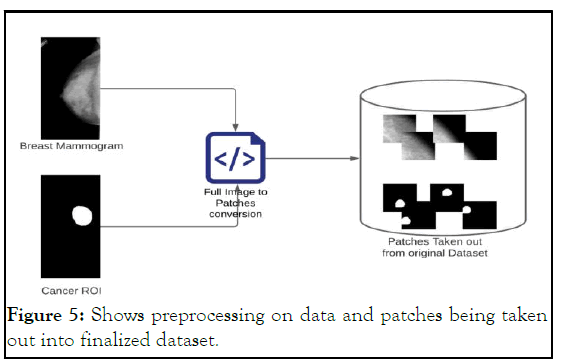

Method: Breast cancer can be detected in its early stages with MRI and/or mammography of the breast. This research proposes a novel approach to breast cancer detection, classification and segmentation. The proposed framework uses breast mammograms from the CBIS-DDSM (Curated Breast Imaging Subset of DDSM) DICOM images. Mammograms are low-dose X-ray images of the breast. The DICOM data was preprocessed so that it could be handled in a more conventional image format; patches were then extracted from the mammogram images and fed into the Mask R-CNN neural network.

Results: The proposed framework is able to localize a cancerous tumor even when it has developed in multiple regions, and it acts as a multi-class classifier. It classifies whether a tumor is benign or malignant and segments the cancerous region with a pixel-wise annotation. The average accuracy observed on the test cases is about 85%, with a precision of 0.75, a recall of 0.8 and an F1 score of 0.825.

Conclusion: The proposed framework is cost-efficient and can serve as a supporting tool for radiologists in breast cancer detection. In the future, the proposed approach could also be applied to the classification and segmentation of other cancerous tumors.

Keywords: Breast tumor classification; Mask RCNN; Breast cancer; Mammogram

Over the years, breast cancer has become one of the leading causes of cancer death among women worldwide; although it also affects men, the great majority of patients are women. According to Nounou, et al., there were 18.1 million new cancer cases and 9.6 million cancer deaths in 2018 alone, counting both sexes [1,2]. For both sexes combined, lung cancer accounted for almost 11.6% of all cases reported in 2018 and almost 18.4% of all cancer deaths. Female breast cancer was the second most commonly diagnosed cancer, also accounting for about 11.6% of all new cases, and it is the leading cause of cancer death among women. An earlier study reports nearly 1.7 million breast cancer cases and about 521,900 breast cancer deaths in 2012 [3,4], and states that breast cancer causes about 15% of all cancer-related deaths among women. Breast cancer is not limited to women, but the number of cases and deaths reported among men each year is nowhere near that among women: male breast cancer accounts for about 0.8%-1% of all breast cancer cases, and the scope of this research is limited to breast cancer in women [5,6]. Recent analyses of breast cancer among women in the US show that, although deaths have plateaued and in some cases declined, the number of reported cases has not yet been brought under control [7]. This research aims to support the early screening and diagnosis of breast cancer through mammograms.
For artificial intelligence, and deep learning in particular, the main way to help suppress breast cancer is through early detection and classification. New medicines may eventually transform cancer treatment, but for now the early screening, classification and segmentation of breast cancer can contribute a great deal to the broader goal of CAD (Computer Aided Diagnosis). Breast cancer can be screened for and diagnosed in several ways, of which two stand out: MRI and mammography. Depending on the doctor, patients are usually asked after a physical exam to get an MRI or a mammogram of the breast for a clearer picture of what is happening inside the breast tissue. As previous research notes, breast cancer can be diagnosed early with both MRI and mammography; mammography is the faster of the two, a roughly 20-minute procedure, and it is also one of the safest diagnostic methods. Based on this past research, mammography is the only diagnostic method that has been shown to reduce breast cancer deaths, owing to the key role it has played in early detection. Some studies argue that histopathological imaging gives more accurate results and that histopathology should be the doctor's approach to diagnosis; however, histopathology takes far more time and money than mammography, and mammography is a low-risk, non-invasive procedure. With the advances in artificial intelligence, especially in deep learning, the number of studies based on medical image analysis has increased.
Machine learning algorithms have opened doors that researchers did not know existed. As a result, many past studies have used machine learning techniques to detect, classify and segment breast tumors in mammogram images. One review found that, since 2010 and even before, researchers around the world have been working to automate the screening and diagnosis of breast tumors, and it describes numerous studies that applied different machine learning algorithms to the same task: computer-aided diagnosis of breast cancer. Classical machine learning algorithms, however, are not designed to learn directly from raw image data, and deep learning methods generally outperform them on image-based modeling. The machine learning algorithms surveyed in that review mostly achieved accuracies that a radiologist could easily outperform, whereas many deep learning studies report otherwise. In one study, the researcher obtained data on about 800 breast cancer patients who had undergone a biopsy and, evaluating with a confusion matrix and ROC analysis, reached 90.5% accuracy in classifying the data as benign, malignant or normal. Another study trained a Probabilistic Neural Network (PNN) on mammogram images and classified breast tumors with over 80% accuracy; it also describes methods for segmenting tumors and drawing bounding boxes using Fuzzy C-Means (FCM) and the Discrete Wavelet Transform (DWT). Simple artificial neural networks, however, are not designed for image data; Convolutional Neural Networks (CNNs) generally outperform other types of algorithms in image analysis, classification, segmentation and related tasks.
CNNs have been used in numerous studies of breast cancer classification and segmentation. Thanks to recent advances in deep learning, specifically in convolutional neural networks, a growing number of studies revolve around medical image analysis, and most of them classify some disease using CNNs or other deep learning algorithms. Turning to the specific use of CNNs for detecting and classifying breast cancer in mammograms and MRI images: one group of researchers took a small set of mammogram cases from the mini-MIAS (Mammographic Image Analysis Society digital mammogram database) database. Because breast mammograms are very large, and larger images mean longer training times, they extracted random patches from the mammograms and fed them to a customized CNN architecture. They report an accuracy of over 85%, meaning that the network correctly classified over 85% of the test cases as normal, benign or malignant.

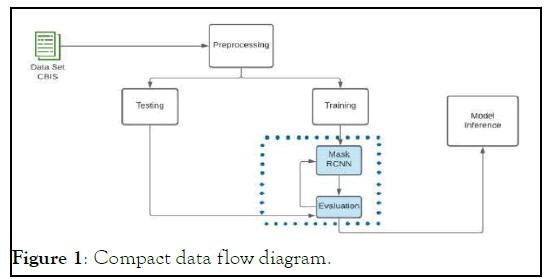

Another study used breast MRI images, preprocessed by extracting smaller patches, not from random regions but from the regions containing the tumor. Each patch was resized to 50 × 50 pixels and its values were normalized to the range 0 to 1. According to the authors, their proposed CNN architecture outperformed almost all previous CNN-based models, whether those used the DDSM (Digital Database for Screening Mammography) dataset or the MIAS dataset, with an accuracy of almost 98%. Another group of researchers designed a new convolutional architecture specifically for medical image analysis and named it U-Net after its U-shaped structure. Many medical image analysis studies have since used U-Net. For instance, one group used a modified U-Net, which they call Dense-U-Net, to classify and segment breast cancer in mammogram images from the DDSM and achieved an AUC score of 83.36%. Another study used a plain U-Net to classify and segment breast cancer in breast MRI images and achieved an accuracy of 97.44%. In our approach, we have implemented a Mask R-CNN to detect, classify and segment breast tumors in mammogram images.
Our research was directly inspired by a study that also detected, classified and segmented breast tumors, but did so using MRI images, with preprocessing and training techniques quite different from ours. In this research we implemented Mask R-CNN, but rather than feeding it whole images in the traditional way, we extracted patches from the mammograms. This made training faster than Mask R-CNN training usually is, and it also allowed us to build a dataset of almost 1.5 million patch images, far larger than the datasets used in previous studies. Mask R-CNN is discussed further in the Proposed methodology section. Figure 1 shows the overall steps of this research.

Figure 1: Compact data flow diagram.

Proposed methodology

Breast cancer detection, classification and segmentation have been performed before on mammograms, MRI scans and other cancer screening modalities, as discussed above. Since the task itself is not new, we aimed to introduce a new method that achieves similar or better results while being more efficient than previous approaches. To make our approach stand out, we selected Mask R-CNN, a neural network used primarily for instance segmentation along with localization and classification. Mask R-CNN has been used for medical image analysis before: one study used it to segment breast cancer, but in MRI images, and another used it at the microscopic level to segment the nuclei of different cells. Mask R-CNN has also been used to classify and segment other types of cancer; one notable example classified and segmented prostate cancer in MRI images [8-10].

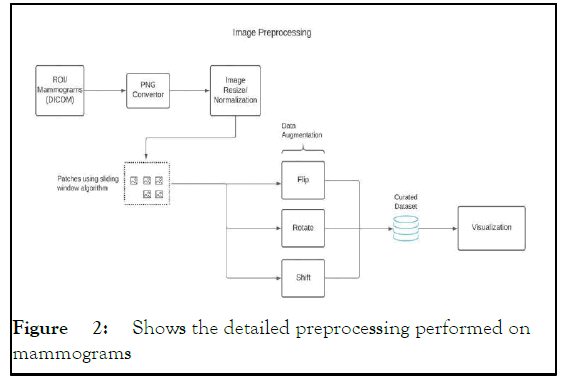

For our research we used mammogram images from CBIS-DDSM. The dataset contains about 3100 breast mammograms, and each mammogram comes with an ROI (Region of Interest) marking where the cancer resides. Because the dataset is annotated this way, simple image processing operations such as a bitwise OR can overlay the ROI onto its mammogram to show where the cancer is. The dataset further divides the cases into two abnormality types, calcification and mass. Since our research does not classify whether a detected cancer is a calcification or a mass within the breast tissue, we pooled all the mammograms together while keeping them in three classes: benign, malignant and normal. The dataset is discussed further in subsection 2.1. Figure 2 shows the preprocessing performed on the mammogram dataset.
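The bitwise OR overlay mentioned above can be sketched in a few lines of NumPy. This is a minimal sketch, not the authors' code: it assumes the mammogram and its ROI mask are already 8-bit grayscale arrays of the same shape, with the mask equal to 255 inside the lesion and 0 elsewhere. The function name `overlay_roi` is hypothetical.

```python
import numpy as np


def overlay_roi(mammogram: np.ndarray, roi_mask: np.ndarray) -> np.ndarray:
    """Burn a binary ROI mask into a grayscale mammogram with a bitwise OR.

    Assumes both arrays are 8-bit and the same shape, with the mask equal to
    255 inside the lesion and 0 elsewhere.
    """
    if mammogram.shape != roi_mask.shape:
        raise ValueError("mammogram and ROI mask must have the same shape")
    # x | 255 == 255 (lesion pixels turn white); x | 0 == x (rest unchanged)
    return np.bitwise_or(mammogram.astype(np.uint8), roi_mask.astype(np.uint8))
```

If a mask were stored as 0/1 instead of 0/255, it would need to be scaled to 0/255 first; otherwise the OR would only set the lowest bit of each lesion pixel and the overlay would be practically invisible.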

Figure 2: The detailed preprocessing performed on the mammograms.

Dataset CBIS-DDSM

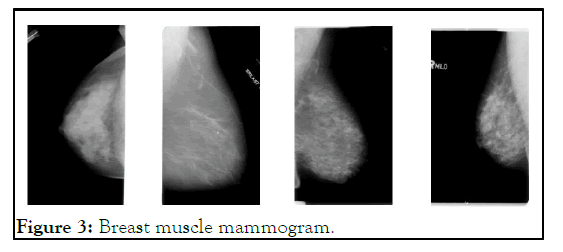

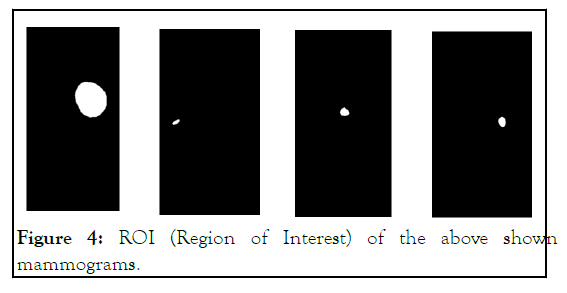

In this research we did not simply want to classify cancerous tumors, which has been done quite accurately for many years; we wanted to detect tumors in their early stages. For detecting and segmenting breast tumors, the modality that most reliably captures cancerous growth in its nascent stages is the mammogram, and research has shown that early detection of breast tumors through mammography reduces fatalities among women. We therefore selected CBIS-DDSM (Curated Breast Imaging Subset of DDSM). The dataset has a total of 3100 mammograms, each with its own corresponding ROI (Region of Interest) [11-15]. Here, an ROI is the part of the mammogram where the cancerous tumor is concentrated; Figure 3 shows a breast mammogram and Figure 4 its corresponding ROI. CBIS-DDSM images are stored in DICOM, the standard image format in medicine, so we converted them to PNG, which is more convenient to process programmatically. After conversion, the mammogram and ROI images turned out to vary widely in size, so for normalization and uniformity we resized the whole dataset to approximately 2750 × 1100 pixels. The dataset is divided into two parts: cases with calcifications in the breast tissue and cases with masses developing in the breast tissue. Within the calcification cases there are benign and malignant tumors, and likewise within the mass cases.
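The DICOM-to-PNG conversion and resizing step could look roughly like the sketch below. In practice the raw pixels would typically be read with pydicom (`pydicom.dcmread(path).pixel_array`) and written out with OpenCV's `cv2.imwrite`; to stay self-contained, the sketch covers only the NumPy steps in between. The min-max rescaling to 8 bits, the nearest-neighbour interpolation and the reading of 2750 × 1100 as (height, width) are assumptions, since the text does not specify them, and the function names are hypothetical.

```python
import numpy as np

TARGET_SIZE = (2750, 1100)  # assumed (height, width) for "almost 2750 x 1100"


def to_uint8(pixels: np.ndarray) -> np.ndarray:
    """Min-max rescale raw DICOM pixel data (often 12- or 16-bit) to 0..255."""
    pixels = pixels.astype(np.float64)
    lo, hi = pixels.min(), pixels.max()
    if hi == lo:  # constant image: avoid division by zero
        return np.zeros(pixels.shape, dtype=np.uint8)
    scaled = (pixels - lo) / (hi - lo) * 255.0
    return np.round(scaled).astype(np.uint8)


def resize_nearest(image: np.ndarray, height: int, width: int) -> np.ndarray:
    """Nearest-neighbour resize; unlike smooth interpolation, it keeps ROI masks binary."""
    rows = (np.arange(height) * image.shape[0] / height).astype(int)
    cols = (np.arange(width) * image.shape[1] / width).astype(int)
    return image[rows[:, None], cols[None, :]]


def prepare_for_png(raw_pixels: np.ndarray) -> np.ndarray:
    """8-bit, fixed-size array ready to be written as a PNG."""
    return resize_nearest(to_uint8(raw_pixels), *TARGET_SIZE)
```

Nearest-neighbour resampling is a deliberate choice here because the same resize is applied to the ROI masks, where interpolated grey values at the lesion border would be meaningless.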
Since our research does not distinguish calcification from mass formation in the breast tissue, we discarded this attribute and combined the remaining cases into two categories: benign cases and malignant cases (Figure 4). This dataset was then divided into training and test sets, giving four folders in total: benign training, benign test, malignant training and malignant test.
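Merging the calcification and mass cases into benign and malignant folders amounts to a relabelling step. The sketch below assumes each case is a dictionary with hypothetical keys `pathology`, `split` and `image_path` (CBIS-DDSM's own description files use their own column names), and it assumes that a pathology value such as `BENIGN_WITHOUT_CALLBACK` is counted as benign; the text does not say how such cases were handled.

```python
from collections import defaultdict


def label_from_pathology(pathology: str) -> str:
    """Map a pathology string to 'benign' or 'malignant'."""
    p = pathology.strip().upper()
    if p.startswith("MALIGNANT"):
        return "malignant"
    if p.startswith("BENIGN"):  # assumption: BENIGN_WITHOUT_CALLBACK counts as benign
        return "benign"
    raise ValueError(f"unknown pathology: {pathology!r}")


def build_folders(records):
    """Group image paths into four folders: {benign, malignant} x {train, test}.

    The abnormality type (calcification vs. mass) is deliberately ignored,
    which is what merges the two sub-datasets.
    """
    folders = defaultdict(list)
    for rec in records:
        label = label_from_pathology(rec["pathology"])
        split = rec["split"]
        if split not in ("train", "test"):
            raise ValueError(f"unknown split: {split!r}")
        folders[f"{label}_{split}"].append(rec["image_path"])
    return dict(folders)
```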

Figure 3: Breast mammogram.

Figure 4: ROI (Region of Interest) of the above shown mammograms.

Pre-processing patches from mammogram

Past breast cancer studies, particularly those using mammograms, have trained their models on roughly 3000 to 4000 mammogram images. Convolutional neural networks, like other neural networks, generally require large datasets to start generalizing and to reach high accuracy. With that in mind, we looked for ways to enlarge our dataset; image augmentation was the only option available to us, since little open-source breast mammogram data exists. We soon realized, however, that mammograms are so large that training on them, even augmented, would consume considerable GPU time, and Mask R-CNN is itself a large network that takes a long time to train. It was not viable to feed such large images to the network and keep it training for weeks. Another option was to downscale the images, but the ROIs (Regions of Interest) were already small, at most about 200 × 200 pixels, and downscaling any further would make their features disappear entirely.
Earlier work, however, has extracted patches from full images before performing classification or segmentation on them, and a few studies gave us this idea. One segmented retinal blood vessels using classical transfer learning, feeding the retinal images to the network as patches; with this approach it segmented the vessels with an AUPRC (Area Under the Precision/Recall Curve) above 90%. Two other studies built breast cancer classification models using Convolutional Neural Networks (CNNs), one taking mammograms as input and the other breast MRI images, and both fed their data as patches. Following these studies, we took the full mammograms and their corresponding ROI images and extracted patches from each mammogram together with its ROI; while extracting the patches, we also augmented them by randomly flipping, laterally inverting and rotating them by up to 30°. Another study describes the preprocessing of mammograms and the extraction of patches in detail, explaining how finding a tumor lesion in a mammogram is like finding a "needle in a haystack" and why patches are effective for segmentation and classification tasks. Once the patches had been extracted from the mammograms and their ROIs, the data was ready for training, as shown in Figure 5.
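The patch extraction and augmentation described above can be sketched as follows, assuming 2-D grayscale arrays in which the mammogram and its ROI mask are aligned pixel for pixel. The 256 × 256 patch size is the one reported later in the text; the non-overlapping stride, the 50% flip probabilities, the uniform rotation angle in [-30°, 30°] and the nearest-neighbour rotation with zero fill are assumptions, and all function names are hypothetical. The essential point is that every random transform is applied identically to the image patch and its mask, so the annotation stays aligned.

```python
import numpy as np

PATCH = 256  # patch size reported in the text


def iter_patches(image, mask, size=PATCH, stride=PATCH):
    """Yield aligned (image_patch, mask_patch) pairs on a regular grid.
    The stride is an assumption; the text does not state one."""
    h, w = image.shape[:2]
    for y in range(0, h - size + 1, stride):
        for x in range(0, w - size + 1, stride):
            yield image[y:y + size, x:x + size], mask[y:y + size, x:x + size]


def rotate_nearest(a, degrees):
    """Rotate a 2-D array about its centre (nearest neighbour, zero fill)."""
    h, w = a.shape
    theta = np.deg2rad(degrees)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, look up its source pixel.
    src_y = np.rint(np.cos(theta) * (yy - cy) - np.sin(theta) * (xx - cx) + cy).astype(int)
    src_x = np.rint(np.sin(theta) * (yy - cy) + np.cos(theta) * (xx - cx) + cx).astype(int)
    valid = (src_y >= 0) & (src_y < h) & (src_x >= 0) & (src_x < w)
    out = np.zeros_like(a)
    out[valid] = a[src_y[valid], src_x[valid]]
    return out


def augment(img, msk, rng, max_angle=30.0):
    """Apply the same random flip, lateral inversion and rotation to both arrays."""
    if rng.random() < 0.5:
        img, msk = np.flipud(img), np.flipud(msk)  # vertical flip
    if rng.random() < 0.5:
        img, msk = np.fliplr(img), np.fliplr(msk)  # lateral inversion
    angle = rng.uniform(-max_angle, max_angle)
    return rotate_nearest(img, angle), rotate_nearest(msk, angle)
```

For example, `augment(patch, mask_patch, np.random.default_rng(0))` returns one randomly transformed pair; calling it several times per patch multiplies the size of the dataset.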

Figure 5: Preprocessing of the data and extraction of patches into the final dataset.

Training

We trained the network on an Nvidia 3080 Ti GPU using TensorFlow 1.14.0, with the OpenCV library for image preprocessing. Our dataset contained almost 1.5 million images and we sent 500 images per epoch stochastically, so training took almost 2200 epochs; at about 1 minute per epoch, the whole run lasted roughly 36 hours. The learning rate started at 0.001 and was lowered to 0.0001 about halfway through training using a callback; the remaining hyperparameters are listed in Table 1. We used the Matterport implementation of Mask R-CNN for TensorFlow, which is open source on GitHub.
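The learning-rate drop described above is a simple step schedule. The sketch below assumes the switch happens at epoch 1100 of 2200 (the text says only "about halfway"); its `(epoch, lr)` signature is chosen so that it could, for example, be passed to a Keras `LearningRateScheduler` callback, although the text does not say which callback was used. It also reproduces the training-time arithmetic of 2200 epochs at about one minute each. The names `step_schedule` and `training_hours` are hypothetical.

```python
from typing import Optional

TOTAL_EPOCHS = 2200
SWITCH_EPOCH = TOTAL_EPOCHS // 2  # assumed: "about halfway" through training


def step_schedule(epoch: int, lr: Optional[float] = None) -> float:
    """Learning rate for a 0-based epoch index: 0.001, then 0.0001.

    `lr` (the current rate) is accepted only so the signature matches what a
    Keras LearningRateScheduler passes; it is not used.
    """
    return 1e-3 if epoch < SWITCH_EPOCH else 1e-4


def training_hours(epochs: int = TOTAL_EPOCHS, minutes_per_epoch: float = 1.0) -> float:
    """Wall-clock estimate in hours: epochs x minutes per epoch / 60."""
    return epochs * minutes_per_epoch / 60.0
```

`training_hours()` evaluates to 2200 / 60 ≈ 36.7 hours, in line with the roughly 36 hours reported.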

Mask RCNN

Mask R-CNN is a state-of-the-art neural network used mainly for instance segmentation; because it extends and improves on Faster R-CNN, it also performs localization and classification. Mask R-CNN is most often seen in object detection, but its original authors showed that it generalizes across very different datasets, and it uses RoIAlign to extract features for each object region. Once the dataset had been preprocessed, the full mammograms and their ROIs were cut into patches and the patches were augmented; only then did we start training the breast cancer model. No part of the neural architecture was changed, but the hyperparameters were tuned, and the changed values are listed in Table 1. The Mask R-CNN loss $L = L_{class} + L_{box} + L_{mask}$ was minimized so that the model would converge and generalize well. We ran many experiments, tweaking the hyperparameters and retraining, and arrived at the best hyperparameters for our breast cancer model through educated guesses and trial and error.
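RoIAlign, mentioned above, replaces the coordinate rounding of earlier RoI pooling with bilinear sampling. The single-channel NumPy sketch below only illustrates the idea: it samples a fixed 2 × 2 grid of points in each output bin and averages them, and it clamps sample points to the feature-map border, which simplifies how real implementations treat out-of-range points. It is not the Matterport code (which builds its RoIAlign layer on TensorFlow's `tf.image.crop_and_resize`), and the function names are hypothetical.

```python
import numpy as np


def bilinear(feature, y, x):
    """Bilinearly interpolate a 2-D feature map at a real-valued (y, x)."""
    h, w = feature.shape
    y = min(max(y, 0.0), h - 1.0)  # simplification: clamp to the border
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = (1 - dx) * feature[y0, x0] + dx * feature[y0, x1]
    bottom = (1 - dx) * feature[y1, x0] + dx * feature[y1, x1]
    return (1 - dy) * top + dy * bottom


def roi_align(feature, box, out_size=7, samples=2):
    """Pool a box (y1, x1, y2, x2, real-valued feature-map coordinates, no
    rounding) into an out_size x out_size grid, averaging samples x samples
    bilinear points per bin."""
    y1, x1, y2, x2 = box
    bin_h = (y2 - y1) / out_size
    bin_w = (x2 - x1) / out_size
    out = np.zeros((out_size, out_size))
    for i in range(out_size):
        for j in range(out_size):
            vals = [
                bilinear(feature,
                         y1 + (i + (si + 0.5) / samples) * bin_h,
                         x1 + (j + (sj + 0.5) / samples) * bin_w)
                for si in range(samples) for sj in range(samples)
            ]
            out[i, j] = np.mean(vals)
    return out
```

Because the box coordinates are never rounded, small lesions that cover only a few feature-map cells keep their exact position, which is the property that makes RoIAlign useful for pixel-level masks.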

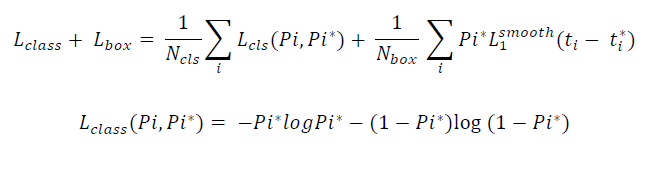

The loss function of Mask RCNN is defined as,

$$L = L_{class} + L_{box} + L_{mask}$$

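where $L_{class}$ and $L_{box}$ are the same as in Mask R-CNN's predecessor, Faster R-CNN. For a region of interest with ground-truth class $u$, predicted probability $p_u$ for that class, predicted box offsets $t^u$ and ground-truth box offsets $v$, they are defined as

$$L_{class} = -\log p_u, \qquad L_{box} = [u \geq 1] \sum_{i \in \{x, y, w, h\}} \mathrm{smooth}_{L_1}\left(t_i^u - v_i\right)$$

where $\mathrm{smooth}_{L_1}(z) = 0.5 z^2$ if $|z| < 1$ and $|z| - 0.5$ otherwise, and the indicator $[u \geq 1]$ restricts the box term to foreground regions. The mask loss $L_{mask}$ is the average binary cross-entropy over the $m \times m$ mask predicted for the ground-truth class:

$$L_{mask} = -\frac{1}{m^2} \sum_{1 \leq i, j \leq m} \left[ y_{ij} \log \hat{y}_{ij} + \left(1 - y_{ij}\right) \log\left(1 - \hat{y}_{ij}\right) \right]$$

where $y_{ij}$ is the ground-truth label of mask pixel $(i, j)$ and $\hat{y}_{ij}$ is its predicted probability.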
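The three loss terms can be evaluated for a single region of interest with the NumPy sketch below. It follows the standard Fast/Faster R-CNN definitions (log loss for the class, smooth L1 over the four box offsets, no box loss for background regions) and the per-pixel average binary cross-entropy for the mask. Batching, loss weights and the selection of the ground-truth-class mask are left out, and the function names are hypothetical.

```python
import numpy as np


def smooth_l1(z):
    """0.5 * z**2 where |z| < 1, |z| - 0.5 elsewhere."""
    az = np.abs(z)
    return np.where(az < 1.0, 0.5 * z ** 2, az - 0.5)


def class_loss(probs, u):
    """Log loss -log p_u for one region, given its softmax probabilities."""
    return float(-np.log(probs[u]))


def box_loss(t, v, u):
    """Smooth L1 summed over (x, y, w, h); zero for background regions (u = 0)."""
    if u == 0:
        return 0.0
    diff = np.asarray(t, dtype=float) - np.asarray(v, dtype=float)
    return float(np.sum(smooth_l1(diff)))


def mask_loss(pred_mask, true_mask, eps=1e-7):
    """Average per-pixel binary cross-entropy over an m x m mask."""
    p = np.clip(np.asarray(pred_mask, dtype=float), eps, 1 - eps)  # avoid log(0)
    y = np.asarray(true_mask, dtype=float)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def total_loss(probs, u, t, v, pred_mask, true_mask):
    """L = L_class + L_box + L_mask for a single region of interest."""
    return class_loss(probs, u) + box_loss(t, v, u) + mask_loss(pred_mask, true_mask)
```

As a sanity check, a mask that predicts 0.5 everywhere has a mask loss of exactly log 2 ≈ 0.693, whatever the ground truth.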

Figure 6 shows the complete Mask R-CNN architecture as applied to our particular case.

Figure 6: Mask RCNN for breast cancer detection.

When we made predictions on the original mammogram images, the ROI where the cancer lies looked very small at a dimension of about 4000 × 5000 pixels. It was more efficient and easier to split each mammogram into 4 patches and make predictions on all 4 of them, since the cancer would typically be contained in two of the patches, or even just one (Figures 7-13).
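Splitting a mammogram into 4 patches for inference, and mapping the predictions back onto the full image, could be done as in the sketch below. The 2 × 2 grid is an assumption (the text says only "4 patches"), as is the (y1, x1, y2, x2) box convention, which is the order the Matterport implementation uses for its predicted boxes; the function names are hypothetical.

```python
import numpy as np


def split_into_quadrants(image):
    """Split an image into a 2 x 2 grid; returns [(tile, (y_offset, x_offset)), ...]."""
    h, w = image.shape[:2]
    hy, hx = h // 2, w // 2
    tiles = []
    for y0, y1 in ((0, hy), (hy, h)):
        for x0, x1 in ((0, hx), (hx, w)):
            tiles.append((image[y0:y1, x0:x1], (y0, x0)))
    return tiles


def to_full_image_boxes(boxes, offset):
    """Shift (y1, x1, y2, x2) boxes predicted on a tile back to full-image coordinates."""
    oy, ox = offset
    return [(y1 + oy, x1 + ox, y2 + oy, x2 + ox) for (y1, x1, y2, x2) in boxes]


def stitch_masks(tile_masks, offsets, full_shape):
    """OR per-tile boolean masks back into one full-size mask."""
    full = np.zeros(full_shape, dtype=bool)
    for m, (oy, ox) in zip(tile_masks, offsets):
        full[oy:oy + m.shape[0], ox:ox + m.shape[1]] |= m.astype(bool)
    return full
```

Odd image sizes are handled by giving the extra row or column to the lower and right tiles, so the four tiles always cover the whole mammogram without overlap.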

Figure 7: Mammogram image being split into 4 patches for further prediction.

As Figure 7 shows, the image is split into 4 patches, and predictions are made on these patches. The results are shown below side by side: the ground truth and the predictions of our neural network. In addition, certain loss values give us, and researchers generally, an easy way to monitor the convergence of Mask R-CNN, as discussed in sub-section 2.3.1; the loss curves for our proposed methodology follow.

Figure 8: This graph shows val_rpn_bbox_loss between x-axis: Epoch and y-axis: Loss.

Figure 9: This graph shows val_mrcnn_class_loss between x-axis: Epoch and y-axis: Loss.

Figure 10: Ground truths.

Figure 11: The original pictures (top) and their corresponding Mask R-CNN predicted results (bottom).

Figure 12: This graph shows val_mrcnn_bbox_loss between x-axis: Epoch and y-axis: Loss.

Figure 13: This graph shows val_mrcnn_mask_loss between x-axis: Epoch and y-axis: Loss.

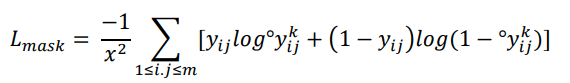

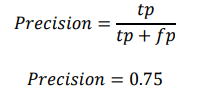

Lastly, for further results, the trained model was given 120 samples divided equally among the three cases: 40 benign, 40 malignant and 40 normal, where normal means the breast tissue contains no cancer lesion. A confusion matrix was plotted from these cases to determine the recall and precision of the model. Figure 14 shows the confusion matrix.

Figure 14: Confusion matrix of benign, malignant and normal.

To calculate precision and recall, the True Positive (TP) and False Positive (FP) counts were taken from the confusion matrix; precision is given by:

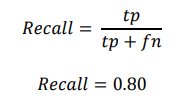

In the same manner, recall can be calculated with the given formula:

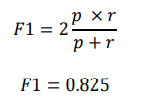

For the F1 score, with precision $P$ and recall $r$:

$$F_1 = \frac{2 P r}{P + r}$$
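Precision, recall and F1 can be computed from a three-class confusion matrix as in the sketch below. The text does not state how the per-class values were combined into single scores, so macro-averaging is an assumption, and the labels used to exercise the code are made-up examples, not the study's data. The function names are hypothetical.

```python
import numpy as np

CLASSES = ("benign", "malignant", "normal")


def confusion_matrix(y_true, y_pred, classes=CLASSES):
    """Rows are true classes, columns are predicted classes."""
    idx = {c: i for i, c in enumerate(classes)}
    cm = np.zeros((len(classes), len(classes)), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[idx[t], idx[p]] += 1
    return cm


def per_class_metrics(cm):
    """Per-class precision TP / (TP + FP) and recall TP / (TP + FN)."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as the class, but wrong
    fn = cm.sum(axis=1) - tp  # belong to the class, but missed
    precision = np.divide(tp, tp + fp, out=np.zeros_like(tp), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=np.zeros_like(tp), where=(tp + fn) > 0)
    return precision, recall


def f1(p, r):
    """Harmonic mean of precision and recall: 2Pr / (P + r)."""
    return 0.0 if p + r == 0 else 2 * p * r / (p + r)


def macro_scores(cm):
    """Assumed aggregation: unweighted mean over classes, then F1 of those means."""
    precision, recall = per_class_metrics(cm)
    p, r = float(precision.mean()), float(recall.mean())
    return p, r, f1(p, r)


def accuracy(cm):
    """Correct predictions (diagonal) over all predictions."""
    return float(np.trace(cm) / cm.sum())
```

For instance, plugging in the precision and recall reported in the abstract, `f1(0.75, 0.8)` evaluates to 1.2 / 1.55 ≈ 0.774.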

The diagnosis, detection, classification and segmentation of breast tumors from mammograms is not a new concept; it has been implemented with CAD (Computer Aided Diagnosis) for many years. One review, for example, surveys the use of computers and many non-machine-learning techniques to detect and classify cancerous tumors in the breast. Those techniques were not as effective as machine learning and deep learning, and given the results deep learning has produced, people now have a degree of trust in these computer science practices being incorporated into professional medical use. Image-based AI (Artificial Intelligence) flourished after the introduction of deep learning techniques such as the convolutional neural network, and medical image analysis has grown rapidly thanks to advances in CNNs. Natural curiosity led people to apply CNNs in almost every field, from simple object detection to autonomous cars and medical image analysis, and CNNs showed promise in each of them, which is why our research exists. Before long, CNNs were being applied to problems such as pancreas classification, prostate cancer classification, retinal blood vessel segmentation and cell nucleus classification using Mask R-CNN, and numerous other studies have used deep learning or convolutional neural networks to detect, classify and/or segment images.
When we started this research, we knew only that convolutional neural networks could be used for tasks such as classification. Once we saw their applications, we learned that they could also solve medical imaging problems, and after further reading we found several interesting studies that used CNNs to classify and segment cancerous tumors. These studies differ considerably from one another. For instance, one uses a CNN to detect and classify breast tumors in mammogram images, and for preprocessing it extracts patches from the mammograms and feeds those patches to the CNN, whereas other deep learning studies of breast cancer detection and/or classification use full mammogram images together with other preprocessing techniques. While searching for the CNN that best fit our purposes, we came across the U-Net architecture, one of the most widely used architectures for medical image analysis. In one interesting paper, the researchers classified and segmented cancerous tumors in mammogram images and proposed a customized neural architecture, an extended version of U-Net that they call Attention U-Net [16-19]. Another study used breast MRI images containing cancerous lesions and localized and segmented the regions where the cancer was developing.
We also reviewed studies that solved breast cancer segmentation and/or classification in breast MRI images using different encoders and decoders in their CNN architectures, and we looked at plain artificial neural networks applied to breast cancer classification and/or segmentation. We found two such studies: an older one that uses mammogram images and a standard ANN (Artificial Neural Network) to predict the type of breast cancer, and another that uses a traditional ANN to detect and classify breast cancer in mammogram images. The Attention U-Net study is clearly quite mature and has largely solved the breast cancer detection, classification and segmentation task. At the same time, it is hard to implement: one would have to code each neural network and its supporting components by hand. Its one advantage is that it uses full mammogram images, so the data needs little preprocessing. For future improvements, or for a researcher who wants to apply the same architecture to classification and segmentation on a different dataset, this would be tedious and would require a powerful GPU, and keeping the network training on an E2 instance would cost a considerable amount of money.
We then came across a paper that implemented Mask R-CNN for breast cancer classification, localization and segmentation, which is exactly what Mask R-CNN does, as discussed in the Mask RCNN section. We found no other research that had used Mask R-CNN to segment breast cancer in DDSM mammogram images, so this was a novel task. In addition, Mask R-CNN is easy to implement and fast to train; it is easy to implement because existing frameworks already provide it, such as the Matterport implementation for TensorFlow. When we started training, we realized that instead of feeding the network full mammogram images, which slows training because of their enormous dimensions, we could feed it patches of the mammograms and their corresponding ROIs (Regions of Interest). We extracted the patches much as an earlier study had done, since it too wanted training to be easy and fast, and by using 256 × 256 patches we made training significantly easier. This gives a novel approach to classifying, localizing and segmenting breast cancer, and in principle the same procedure, extracting patches from the images and training the network in TensorFlow or PyTorch, could be applied to almost any other cancer.

Future improvements

There is certainly much room for improvement in this research. In our literature review we found no previous study that used Mask R-CNN to detect, classify and/or segment breast tumor regions in mammograms, so we look forward to seeing how this line of research develops. For anyone who wants to fine-tune or reproduce these results, Table 1 shows the hyperparameters for the TensorFlow-based Mask R-CNN framework; default values are omitted, and only the hyperparameters that were changed are listed below.

| Hyperparameter | Value |
|---|---|
| Batch_size | 500 |
| Detection_max_instances | 100 |
| Detection_min_confidence | 0.7 |
| GPU_count | 2 |
| Images_PER_GPU | 2 |
| Learning_rate | 0.001, 0.0001, 0.00001 |
| Num_classes | 2 |
| Steps_per_epoch | 500 |
| Train_rois_per_image | 512 |
| Weight_decay | 0.001 |
| Use_mini_mask | FALSE |
| Validation_steps | 500 |

Table 1: The hyper parameters for tensor flow based mask RCNN framework.
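Table 1 can be carried into code as a configuration object. The sketch below is a plain dataclass that mirrors the table using the upper-case attribute names of the Matterport `mrcnn.config.Config` class; with that library installed, one would instead subclass `Config` and set the same attributes. Two notes on that library: it derives its own `BATCH_SIZE` as `IMAGES_PER_GPU * GPU_COUNT` rather than reading it directly, and its `NUM_CLASSES` counts the background class. The class name `Table1Config` and the fields `TABLE_BATCH_SIZE` and `LEARNING_RATES` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Table1Config:
    """Non-default hyperparameters from Table 1, using Matterport-style names."""
    GPU_COUNT: int = 2
    IMAGES_PER_GPU: int = 2
    NUM_CLASSES: int = 2  # Matterport counts background as one of the classes
    STEPS_PER_EPOCH: int = 500
    VALIDATION_STEPS: int = 500
    DETECTION_MAX_INSTANCES: int = 100
    DETECTION_MIN_CONFIDENCE: float = 0.7
    TRAIN_ROIS_PER_IMAGE: int = 512
    WEIGHT_DECAY: float = 0.001
    USE_MINI_MASK: bool = False
    TABLE_BATCH_SIZE: int = 500  # hypothetical field: the Batch_size row as listed
    # Hypothetical field: Matterport takes one learning rate per train() call,
    # so the three values in Table 1 would be used in successive training stages.
    LEARNING_RATES: tuple = (0.001, 0.0001, 0.00001)

    @property
    def per_step_batch(self) -> int:
        """Images per optimisation step as Matterport would derive it."""
        return self.GPU_COUNT * self.IMAGES_PER_GPU
```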

In conclusion, Mask R-CNN proved to be an efficient method for detecting, classifying and segmenting breast tumors using mammogram images alone. With an overall loss of 1.238, the network converged well on the given dataset, and with an overall accuracy of 85% it is a useful methodology for breast cancer segmentation. We hope that future research will apply the same technique and neural network to other case studies and problems, especially in the growing field of biomedicine.

Acknowledgements: This research was done under the supervision of Dr. Najeed Ahmed. The resources were provided by the CSRD (Center for Software Research and Development) lab.

Citation: Raza SK, Sarwar SS, Syed SM, Khan NM (2023) Classification and Segmentation of Breast Tumor using Mask R-CNN on Mammograms. J Tumour Res. 9:204.

Received: 23-Sep-2022, Manuscript No. JTDR-22-19343; Editor assigned: 26-Sep-2022, Pre QC No. JTDR-22-19343 (PQ); Reviewed: 10-Oct-2022, QC No. JTDR-22-19343; Revised: 02-Jan-2023, Manuscript No. JTDR-22-19343 (R); Published: 29-Sep-2023, DOI: 10.35248/2684-1258.23.9.204

Copyright: © 2023 Raza SK, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution and reproduction in any medium, provided the original author and source are credited.