Journal of Biomedical Engineering and Medical Devices

Open Access

ISSN: 2475-7586

Research Article - (2017) Volume 2, Issue 3

This paper presents the design of an access control system that uses a heart sound biometric signature based on the energy percentage in each set of wavelet coefficients and on MFCC features. A collection of 40 heart sound recordings from different persons was gathered from the internet; for each recording, signatures were calculated over two heart cycles after denoising. Euclidean distance is used as the classification tool between signatures. It was found that the distances between recordings taken at different times for the same person are below a threshold value, while the distances to the signatures of other persons are above it. A MATLAB-based GUI was built to receive recordings and to perform denoising, signature calculation and classification. The GUI was interfaced through RS232 with a simulated circuit built around an ATMEGA 16 microcontroller, which was programmed with Bascom-AVR, and Proteus was used to verify the code. To demonstrate the implemented system, access to the controlled system is granted or denied (verification mode) depending on the person whose recording is presented.

Keywords: Heart sounds, Access control, Biometric, CWT, MFCC, Euclidean distance, GUI, Verification

FFT: Fast Fourier Transform; STFT: Short Time Fourier Transform; CWT: Continuous Wavelet Transform; HS: Hilbert Spectrum; MFCC: Mel Frequency Cepstral Coefficient; PCG: Phono Cardio Gram.

Security is the degree of resistance to, or protection from, harm. Because security is so important, ID cards and passwords were introduced for this task, but these techniques are weak, and in the absence of robust personal recognition schemes such systems are vulnerable to the wiles of an impostor [1]. Biometric techniques were developed to prevent such fraud. Biometrics are physiological or behavioral characteristics extracted from human subjects, e.g., fingerprint, iris, face, voice and heart sounds, and are used for identification and verification [2,3]. By using biometrics, it is possible to confirm or establish an individual’s identity based on “who he/she is”, rather than by “what he/she possesses” (e.g., an ID card) or “what he/she remembers” (e.g., a password) [2].

A biometric system is a system in which a person is identified by means of distinguishing characteristics or qualities belonging to that person. Recognizing humans using computers not only provides some of the best security solutions but also helps to deliver human services efficiently. A biometric authentication system uses behavioral or physiological attributes to identify or verify a person. Physiological characteristics are based on bodily features, such as fingerprint, iris and facial structure. Behavioral traits are based on unique behavioral characteristics of an individual, such as voice and signature [4].

With the progress of technology, security systems require reliable personal recognition schemes to either confirm or determine the identity of an individual requesting their services. The purpose of such schemes is to ensure that the rendered services are accessed only by a legitimate user and no one else. Human heart sounds are natural acoustic signals conveying medical information about an individual’s heart physiology, and they provide a reliable biometric for human identification. Heart sound is classed as a physiological trait because it is a natural sound created by the opening and closure of the valves in the heart [1].

In recent years, it has become very important to identify a user in applications such as personnel security, defense, finance, airports, hospitals and many other important areas [5]. It has therefore become mandatory to use a reliable and robust authentication and identification system. Earlier methods for user identification were mainly knowledge-based, such as a user password, or possession-based, such as a user key; but because of the vulnerability of these methods, it was easy for people to forge the information. Hence, performance-based biometric systems are used for identification, in which a user is recognized by his or her own biometrics. Biometrics uses methods for recognizing users based on one or more physical and behavioral traits. As a result, conventional biometric identification systems based on iris, fingerprint, face and speech have become popular for user identification and verification [6].

A biometric system is essentially a pattern recognition system that operates by acquiring biometric data from an individual, extracting a feature set from the acquired data, and comparing this feature set against the template set in the database. Depending on the application context, a biometric system may operate either in verification mode or identification mode [3]. Verification mode consists of verifying whether a person is who he or she claims to be. It is called a “one to one” matching process, as the system has to complete a comparison between the person’s biometric and only one chosen template stored in a centralized or a distributed database [7].

Heart sounds

Human heart sounds are natural signals produced by the cardiac cycle, a synchronized sequence of contractions and relaxations of the atria and ventricles during which major events occur, such as valves opening and closing and changes in blood flow and pressure. Contraction and relaxation are referred to as systole and diastole, respectively. The sounds can normally be heard using a stethoscope. There are four main heart sounds, called S1, S2, S3 and S4. Normally, only two sounds are audible, S1 and S2, sounding like the words “lub-dub”. S3 and S4 are extra heart sounds heard in both normal and abnormal situations [8]. The first heart sound is produced mainly by the closure of the mitral and tricuspid valves. The second heart sound is produced by the closure of the aortic and pulmonary valves.

Design and simulation

Here an approach based on both the energy and the power spectrum properties of the heart sound signal is developed to analyze this signal for application in a human recognition system. The proposed method is divided into two parts: software and hardware.

Due to the non-stationary nature of the heart sound signal, the continuous wavelet transform was used. Although it is a redundant transformation, its redundancy tends to reinforce the traits and makes all information more visible: the wavelet coefficients respond strongly to changes in the waveform, providing the required features of the signal. This approach solves the STFT’s resolution problem by analyzing the signal at different frequencies with different scales. It is designed to give good time resolution and poor frequency resolution at high frequencies, and good frequency resolution and poor time resolution at low frequencies. It therefore makes sense when the signal at hand has low-frequency components of long duration [2-34]. The mother wavelet used here is the Morlet wavelet function. It is suitable because it is symmetrical, compact and decays to zero quickly, provides better frequency resolution, and accurately determines the frequencies of the heart sounds [34].

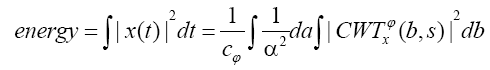

Here, one of the parameters chosen to be extracted from the heart sound signal as a feature is the energy of the sound. Energy is preserved by the transform, so the following equation holds:

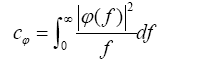

This equation represents the total energy of a domain centered at (b, a) with scale interval Δa and time interval Δb. |CWT(b, a)|² is defined as the wavelet scalogram; it shows how the energy of the signal varies with time and frequency. Cψ is known as the admissibility constant and is determined from ψ(f), the Fourier transform of the wavelet function, where f is the central frequency fc [34]. The energy formula was applied to calculate the energy of all coefficients, and these values were then used to calculate the total energy for each scale.
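The per-scale energy signature described above can be sketched as follows. This is a minimal illustration, not the authors' MATLAB code: it assumes the CWT coefficients are already available as one row per scale, and it uses a plain sum of squared magnitudes per scale, leaving out the scale weighting and the admissibility constant. The function name `energy_percentages` and the sample values are hypothetical.

```python
def energy_percentages(coeffs):
    """Return the percentage of total energy held by each scale.

    coeffs: list of rows, one row per scale; each row is a list of
    (real or complex) wavelet coefficients over time.
    Assumption: energy per scale is the plain sum of |coefficient|^2.
    """
    scale_energy = [sum(abs(c) ** 2 for c in row) for row in coeffs]
    total = sum(scale_energy)
    if total == 0:
        # A silent segment carries no energy in any scale.
        return [0.0 for _ in scale_energy]
    return [100.0 * e / total for e in scale_energy]


# Hypothetical coefficients for three scales and four time samples.
coeffs = [
    [1.0, -1.0, 1.0, -1.0],  # energy 4
    [2.0, 0.0, -2.0, 0.0],   # energy 8
    [0.0, 2.0, 0.0, 0.0],    # energy 4
]
signature = energy_percentages(coeffs)
```

Because the signature is expressed as percentages, it always sums to 100 and does not depend on the overall loudness of the recording.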

Feature extraction is a special form of dimension reduction, which transforms the input data into a set of features. Heart sound is an acoustic signal, and many techniques used nowadays for human recognition tasks are borrowed from speech recognition. The most popular choice for feature extraction from acoustic signals is the Mel Frequency Cepstral Coefficients (MFCC), which map the signal onto the Mel scale, a non-linear scale that mimics human hearing. The MFCC system is still superior to plain cepstral coefficients despite its linear filterbanks in the lower frequency range. The idea of using MFCC as the feature set for a PCG biometric system comes from the success of MFCC for speaker identification [35] and from the fact that PCG and speech are both acoustic signals. MFCC is based on human hearing perception, which does not resolve frequencies above about 1 kHz on a linear scale. In other words, MFCC is based on the known variation of the human ear’s critical bandwidth with frequency [23]. MFCC has two types of filter spacing: linear at low frequencies below 1000 Hz and logarithmic above 1000 Hz. MFCCs are the result of a cosine transform of the real logarithm of the short-term energy spectrum expressed on a mel-frequency scale, and they provide an efficient representation. The computation includes mel-frequency warping and cepstrum calculation [23].

Mel-Frequency Cepstrum Coefficients (MFCC) are one of the most widespread parametric representations of audio signals (Davis and Mermelstein). The basic idea of MFCC is the extraction of cepstrum coefficients using a non-linearly spaced filterbank. Instead of uniform spacing, the filterbank is spaced according to the Mel scale: filters are linearly spaced up to 1 kHz and logarithmically spaced above it, decreasing detail as the frequency increases. This scale is useful because it takes into account the way we perceive sounds. The relation between the Mel frequency f_mel and the linear frequency f_lin is the following [35]:

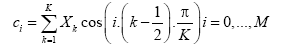

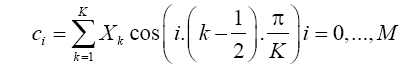
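The Mel mapping can be illustrated with a short sketch. Since the relation itself survives here only as an image, the code assumes the commonly used form f_mel = 2595 · log10(1 + f_lin / 700); whether the paper uses exactly these constants is an assumption. The helper names and the 0 to 4000 Hz band are hypothetical.

```python
import math


def hz_to_mel(f_hz):
    # Assumed common form of the Mel relation (constants 2595 and 700).
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    # Inverse of hz_to_mel.
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_center_frequencies(f_low, f_high, n_filters):
    """Centre frequencies (Hz) of filters spaced evenly on the Mel scale."""
    lo, hi = hz_to_mel(f_low), hz_to_mel(f_high)
    step = (hi - lo) / (n_filters + 1)
    return [mel_to_hz(lo + step * (k + 1)) for k in range(n_filters)]


# Hypothetical band and filter count, chosen only for illustration.
centres = mel_center_frequencies(0.0, 4000.0, 10)
```

Filters that are evenly spaced in Mel end up closely packed at low frequencies and progressively wider apart at high frequencies, which is the behaviour described in the text.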

Some heart-sound biometry systems use MFCC, while others use a linearly-spaced filterbank. The first step of the algorithm is to compute the FFT of the input signal; the spectrum is then fed to the filterbank, and the i-th cepstrum coefficient is computed using the following formula:

$$C_i = \sum_{k=1}^{K} X_k \cos\left[ i \left( k - \frac{1}{2} \right) \frac{\pi}{K} \right], \quad i = 1, \dots, M$$

where K is the number of filters in the filterbank, X_k is the log-energy output of the k-th filter and M is the number of coefficients that must be computed [36].
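The final cosine-transform step can be sketched as below. The code assumes the standard Davis and Mermelstein form, in which the i-th coefficient is the sum over k of X_k · cos(i (k − 1/2) π / K); it takes the log-energy outputs of the filterbank as given and does not cover the FFT or the filterbank itself. The function name and sample values are hypothetical.

```python
import math


def cepstral_coefficients(log_energies, n_coeffs):
    """Cosine transform of filterbank log-energies.

    log_energies: X_1..X_K, the log-energy output of each filter.
    n_coeffs: M, the number of coefficients to compute (i = 1..M).
    Assumption: the standard Davis-Mermelstein cosine form is used.
    """
    K = len(log_energies)
    return [
        sum(
            x * math.cos(i * (k - 0.5) * math.pi / K)
            for k, x in enumerate(log_energies, start=1)
        )
        for i in range(1, n_coeffs + 1)
    ]


# Hypothetical log-energies from an 8-filter bank.
flat = cepstral_coefficients([1.0] * 8, 4)
tilted = cepstral_coefficients([8.0, 7.0, 6.0, 5.0, 4.0, 3.0, 2.0, 1.0], 4)
```

A perfectly flat set of log-energies yields coefficients that are all zero (up to rounding), while a spectrum that slopes downward with frequency concentrates its weight in the first coefficient.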

Software

Pre-processing: The purpose of this step is to eliminate noise and enhance heart sounds, making them easier to segment. High quality signals are essential for correct identification. Unfortunately, the presence of noise in heart sounds signals is inevitable. Even when all background noise is minimized there are always intrinsic sounds impossible to avoid: respiratory sounds, muscular movements and so on. Therefore, the de-noising stage is extremely important, ensuring elimination of noise and emphasizing relevant sounds [33].

Because the noise overlaps with the spectrum of the heart sound signal, simple band-pass filtering is not effective for noise reduction. However, decomposing the signal into narrower sub-bands using the wavelet transform makes it possible to perform temporal noise reduction on the desired sub-bands. The mother wavelet implemented here is the Daubechies wavelet of order 5 (db5). This choice is made because the heart beat signal has most of its energy distributed over a small number of db5 wavelet dimensions (scales), and therefore the coefficients corresponding to the heart beat signal will be large compared to those of any noise.

Segmentation: The purpose of segmentation is to separate all the cardiac cycles in a recording so that each can be analyzed individually. Here the segmentation was done manually and two cycles were selected from each sound [36-43].
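The idea behind wavelet denoising can be sketched in a few lines. To keep the example self-contained, it swaps in a single-level Haar transform in place of the db5 wavelet used in the paper, and it assumes soft thresholding of the detail coefficients; the paper does not state its thresholding rule, so both choices are assumptions. The threshold value and the sample signal are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_forward(x):
    """Single-level Haar transform: returns (approximation, detail)."""
    if len(x) % 2 != 0:
        raise ValueError("signal length must be even")
    approx = [(x[i] + x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    return approx, detail


def haar_inverse(approx, detail):
    """Inverse of haar_forward."""
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) / SQRT2)
        out.append((a - d) / SQRT2)
    return out


def soft_threshold(values, t):
    """Shrink each value toward zero by t; values below t become zero."""
    return [math.copysign(max(abs(v) - t, 0.0), v) for v in values]


def denoise(x, t):
    # Small detail coefficients are treated as noise and suppressed,
    # while the approximation (large-scale shape) is kept unchanged.
    approx, detail = haar_forward(x)
    return haar_inverse(approx, soft_threshold(detail, t))


# Hypothetical samples: a large threshold removes all detail.
smoothed = denoise([1.0, 3.0, 5.0, 5.0], 10.0)
unchanged = denoise([1.0, 3.0, 5.0, 5.0], 0.0)
```

With a threshold of zero the signal is reconstructed unchanged, which is a quick check that the forward and inverse transforms are consistent.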

Feature extraction: Calculating the signatures for two heart cycles (Energy and MFCC).

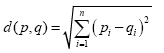

Classification: In this stage the features of the sound are compared with the features stored in the database, and the distance (error) between them is calculated using the Euclidean distance.

The Euclidean distance is the distance between two points, given by:

$$d(p, q) = \sqrt{\sum_{i=1}^{n} (p_i - q_i)^2}$$

where d is the Euclidean distance, p and q are the arrays and n is the dimension of the arrays.
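As a small sketch of the distance computation between two signatures, assuming each signature is a plain list of numbers of equal length (the function name and sample values are hypothetical):

```python
import math


def euclidean_distance(p, q):
    """Euclidean distance between two signatures of the same dimension."""
    if len(p) != len(q):
        raise ValueError("signatures must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


# Hypothetical two-dimensional signatures.
d = euclidean_distance([0.0, 0.0], [3.0, 4.0])
```

A distance of zero means the two signatures are identical; larger values mean the recordings are less alike.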

Hardware

Control unit: It consists of two microcontroller units, a main microcontroller unit and a slave microcontroller unit. The main microcontroller unit receives data from the GUI via the interface unit, displays the result, and sends data to the slave microcontroller unit if an intrusion attempt occurs. The slave microcontroller unit receives data from the main microcontroller unit when an intrusion attempt occurs, activates the red visible alarm and displays a message.

Interface unit: It consists of a serial port (RS-232), which is used as the connection point between the software part and the control unit. It is used to send data from the computer to the microcontroller unit.

Display unit: It consists of two LCDs, a display screen for the main MCU and a display screen for the slave MCU. It is used to display the results in the different cases.

Indicator unit: It consists of two LEDs and an alarm sound, and it works in verification mode. The access control LED is blue and is connected to the main MCU; it lights up if the person is allowed to access the system. The intrusion indicator LED is red and is connected to the slave MCU; it lights up if a person tries to break into the system. The alarm sound is produced by a buzzer connected to the main MCU, which makes a loud sound if an intrusion attempt occurs. The buzzer requires a 12 V supply, but the microcontroller output is 5 V, so an L293D driver is used to convert 5 V to 12 V to drive the buzzer [44-50].
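The behaviour of the indicator and control units can be summarized in a small sketch. This is only a model of the logic described above, not the Bascom-AVR firmware: the function name and dictionary keys are hypothetical, and the actual data sent over RS-232 is not specified in the text.

```python
def indicator_states(access_granted):
    """Model of the outputs for one verification result.

    Assumption: a rejected verification is treated as an intrusion attempt,
    which triggers the buzzer on the main MCU and the red LED on the slave MCU.
    """
    if access_granted:
        return {
            "blue_led": True,       # access LED on the main MCU
            "red_led": False,       # intrusion LED on the slave MCU
            "buzzer": False,        # buzzer driven through the L293D
            "notify_slave": False,  # main MCU stays silent toward the slave
        }
    return {
        "blue_led": False,
        "red_led": True,
        "buzzer": True,
        "notify_slave": True,
    }


granted = indicator_states(True)
rejected = indicator_states(False)
```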

Results and Discussion

1. The plot of two signatures from the same person shows that they match, while there is a clear difference from another person’s signature, as shown in Figures 16-18 for the energy signature (Figures 1-25).

2. The MFCC signature gave better results than the energy signature.

3. The threshold for energy signatures is 9.

4. The threshold for MFCC signatures is 4.
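The verification decision implied by these thresholds can be sketched as follows. The threshold values 9 and 4 come from the results above; the function name, the strict "below threshold" comparison and the sample signatures are assumptions made for illustration.

```python
import math

ENERGY_THRESHOLD = 9.0  # threshold reported for energy signatures
MFCC_THRESHOLD = 4.0    # threshold reported for MFCC signatures


def verify(probe, template, threshold):
    """Accept the claimed identity if the distance is below the threshold."""
    return math.dist(probe, template) < threshold


# Hypothetical MFCC signatures: distance 1.0 is accepted, 5.0 is rejected.
same_person = verify([1.0, 2.0, 3.0], [1.0, 2.0, 4.0], MFCC_THRESHOLD)
other_person = verify([0.0, 0.0], [3.0, 4.0], MFCC_THRESHOLD)
```

Each feature type needs its own threshold because the two signatures live on different numeric scales.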

In this project, we have investigated the possibility of using heart sound as a biometric signature used for human identification and verification. The most important feature in using the heart sound as a biometric is that it cannot be easily simulated or copied, as compared to other biometrics. The main unique property of the heart sounds beyond their application for human identification is their ability to reflect information about the heart’s physiology.

Ethics approval and consent to participate: The study used 40 heart sound recordings from different persons.

The heart sound recordings, made for different persons at different times, were collected from the internet.

Funding: Not Applicable.

The authors declare that they have no competing interests.

HM carried out the heart sound and biometric studies, MATLAB code, design of the study and performed the statistical analysis and sequence alignment and drafted the manuscript. AH carried out the computational studies, participated in the design of the study and sequence alignment. AB participated in its design and coordination and helped to draft the manuscript. All authors read and approved the final manuscript.