International Journal of Advancements in Technology

Open Access

ISSN: 0976-4860

Review Article - (2022)Volume 13, Issue 8

This review paper explores the concepts of human-centered AI. It considers the theoretical principles, theories, and paradigms that underpin the field, and then surveys existing work and open opportunities, based on a structured literature survey of a number of online journal databases. Using the PRISMA model, we screened the available literature and categorized it into various classifications. This paper agrees with the notion that there is an intrinsic need to balance human involvement with increasing computer automation. This balance is relevant to achieving fair, just and reliable systems, especially in the era of chatbots and other AI systems. The related works also throw more light on various applications that revolve around the concept of human-centered AI. Among the research suggestions for future work, we recommend a further extension of the 2-dimensional human-computer autonomy framework by Shneiderman. We further recommend more commitment and attention to balancing human control over contemporary intelligent systems.

Artificial Intelligence; Human centered AI; Autonomy.

Artificial Intelligence (AI) is a discipline of computer science that deals with the research and construction of algorithms that can display behaviours and accomplish tasks that would need some intelligence from a person to complete [1]. AI principles and approaches have been widely included in current computer systems, from Siri to Alexa and a slew of others. Various commentators have recently proposed that AI technology will replace human labour in a variety of industries, rendering current human workers obsolete [2]. The possibility of AI systems gaining autonomy has long provoked alarm and debate among many schools of thought. Biased decision-making owing to insufficient or inaccurate input data is another risk. These concerns prompted the establishment of a number of human-centered AI research labs to investigate how a more human-centered approach may be incorporated into the development of current AI systems [3]. The notion of Human-Centered Artificial Intelligence (HCAI/HAI) was born out of the need for human involvement in the development of such systems.

Human-centered AI is a branch of AI and machine learning that believes that intelligent systems should be built with the understanding that they are part of a wider system that includes human stakeholders such as users, customers, operators, and others [1]. The importance of AI and HAI principles in numerous applications cannot be overstated. Education [4], robotics [5], AI in agriculture, medicine, energy, circular economy, smart cities, smart grids, autonomous cars, and smart home appliances are only a few examples of AI applications.

The first section of this report delves into the theoretical principles, theories, and paradigms that define HAI. As a review, this paper takes a cursory tour of related works in the field of HCI to throw more light on the advances being made. This was achieved by carefully gathering literature from a series of journal databases with the sole aim of tracking recent works in HAI. The inclusion criteria predominantly bordered on relevance and accessibility with respect to the underlying theme of the research [6].

The objectives of this paper are to:

• Identify literature on human centered AI

• Categorize human centered AI based on the literature search

• Present results of categorization in tabular and graphical formats.

• Present future directions of human centered AI

Theoretical frameworks and concepts

These are the main schools of thought that have shaped the field of HAI over time. In response to concerns about unmanageable AI autonomy, universities such as Stanford University, UC Berkeley and MIT have established HAI centres.

To begin with, Stanford University holds that AI research and development should be guided by three goals: to draw on the versatility, nuance, and depth of human intelligence to better serve our needs; to develop AI in step with ongoing research into its impact on human society, which serves as a guide; and, finally, to enhance humanity rather than replace or diminish it [7]. The core of these goals is to make sure that all AI breakthroughs are human-centered and based on the principles of intelligence, relevance, and justice. The HAI-focused group at UC Berkeley, the Center for Human-Compatible Artificial Intelligence, aims to “create the conceptual and technological capabilities to refocus the broad trend of AI research towards provably helpful systems.”

Essentially, all rules and goals relating to HAI development revolve around achieving high degrees of human control together with high levels of automation in order to produce Reliable, Safe, and Trustworthy (RST) systems or applications [8]. Following these principles may allow AI systems to boost human performance even when their inner workings are not fully understood.

Levels of autonomy

The idea of autonomy is perhaps the most crucial factor applicable to present HAI systems. Sheridan and Verplank (1978) worked extensively on computing in the context of human control. Table 1 summarizes the 10-point automation scale provided by Sheridan and Verplank (1978), while Table 2 shows its refinement into a 4-point level of automation, as stated by [9,10]. The 10-point scale, as shown in Table 1, progresses from little or no computer aid in executing a task to almost total control by the computer. Each step up the scale increases the computer's share of control and correspondingly reduces the human's. The 10-point scale is therefore a one-dimensional approach to automation that captures only one quantity: the computer’s degree of control, at the expense of human control. Figure 1 depicts the one-dimensional view of autonomy as shaped over time by [11] and reinforced in [12].

| | Description |
|---|---|
| High | 10. The computer makes all decisions and operates independently of humans. |
| ↑ | 9. The computer only tells the human if it, the computer, makes the decision to do so. |
| | 8. The computer informs the human only when asked, or |
| | 7. The computer performs automatically, informing the person as a result. |
| | 6. Before automated execution, the computer gives the person a limited amount of time to exercise his or her right of veto. |
| | 5. If the human agrees, the computer will carry out the idea. |
| | 4. One option is suggested by the computer, or |
| | 3. The computer reduces the number of options to a handful, or |
| | 2. The computer provides a comprehensive set of decision/action options, or |
| Low | 1. The computer provides no aid; all choices and actions must be made by the human. |

Table 1: The 10-point, 1-dimensional levels of autonomy by Sheridan-Verplank.
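To make the one-dimensional character of the scale concrete, the levels in Table 1 can be encoded as a single ordered value. The following is a minimal sketch, not part of the reviewed literature: the member names and the two helper functions are hypothetical, and the cut-offs at levels 5 and 6 are one reading of the table rather than definitions given by Sheridan and Verplank.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Levels numbered as in Table 1 (1 = low automation, 10 = high).

    The member names are hypothetical shorthand for the table rows.
    """
    NO_AID = 1
    OFFERS_ALL_OPTIONS = 2
    NARROWS_OPTIONS = 3
    SUGGESTS_ONE_OPTION = 4
    EXECUTES_IF_APPROVED = 5
    VETO_WINDOW = 6
    EXECUTES_THEN_INFORMS = 7
    INFORMS_IF_ASKED = 8
    INFORMS_IF_IT_DECIDES = 9
    FULLY_AUTONOMOUS = 10


def human_must_approve(level: AutomationLevel) -> bool:
    """Assumed reading: up to level 5 nothing runs without human choice or consent."""
    return level <= AutomationLevel.EXECUTES_IF_APPROVED


def human_can_veto(level: AutomationLevel) -> bool:
    """Assumed reading: up to level 6 the human can still stop execution beforehand."""
    return level <= AutomationLevel.VETO_WINDOW


if __name__ == "__main__":
    for level in AutomationLevel:
        print(level.value, level.name, human_must_approve(level), human_can_veto(level))
```

Because a single integer carries all the information, raising the computer's level necessarily lowers the human's role in this encoding, which is the limitation the one-dimensional view is criticized for.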

| Level | Description |
|---|---|
| 1 | Information acquisition |
| 2 | Analysis of information |
| 3 | Decision or choice of action |
| 4 | Execution of action |

Table 2: The 4-point levels of automation [9,10].

Figure 1: One-dimensional autonomy, which presents design as a choice between two alternatives, human control and computer control (misleading).

The one-dimensional notion has proven effective in a variety of fields, with further developments, most notably by the US Society of Automotive Engineers (SAE). For self-driving automobiles, the SAE recommended a six-level automation blueprint. That blueprint is likewise based on a one-dimensional level of autonomy, which appears to be misleading. Table 3 outlines the SAE’s six-point automation scale, which runs from level 0 to level 5. As with the other levels of autonomy, a higher number represents fuller computer automation, with the driver having less control, while a lower number represents more human control with less automation or computer interference. The refined 4-point automation level in Table 2, by contrast, recasts the 10-point scale as an information-processing model that runs from acquisition to analysis to decision-making to execution.

| Level | Description |
|---|---|
| 5 | Full autonomy: In every driving circumstance, the vehicle's performance is equivalent to that of a human driver. |
| 4 | High automation: In some places and under specific weather circumstances, fully autonomous cars handle all safety-critical driving duties. |
| 3 | Conditional automation: Under specific traffic or environmental situations, the driver transfers "safety important duties" to the vehicle. |
| 2 | Partial automation: There is at least one automated driving assistance system. The driver is not physically driving the car (hands off the steering wheel AND foot off the pedal at the same time). |
| 1 | Driver assistance: The majority of operations are still managed by the driver, although some (such as steering or acceleration) may be performed automatically by the vehicle. |
| 0 | No automation: The steering, brakes, throttle, and power are all controlled by the human driver. |

Table 3: Levels of automation defined by the US Society of Automotive Engineers.

The notion of complete computer automation has had its share of failures, made evident by high-profile tragedies. The downing of two friendly US planes during the Iraq War [13] and the tragic accident of a Boeing 737 MAX [14] both undermined the idea of one-dimensional autonomy because the costs were so high. These losses bolster the argument that complete computer autonomy without human oversight is insufficient to ensure system success.

HCAI framework for reliable, safe and trustworthy systems

The idea of Reliable, Safe, and Trustworthy (RST) systems was developed in order to produce AI systems that assure high computer automation as well as high human control [8]. It is worth noting that one of the main goals of RST systems is high performance. RST systems are built on three pillars:

1. Sound technological standards that promote system dependability.

2. Management methods and tactics that promote and maintain a safety culture.

3. The presence of independent supervision mechanisms that guarantee confidence.

The concept of dependability is based on the analysis of past system performance using audit trails [15]. Management strategies and actions determine safety. Trustworthiness depends on oversight by organizations, such as government agencies and well-known professional associations, that are independent and widely acknowledged [16]. Professionals who use AI systems nearly always want total control over the system with the least amount of work. The role of human involvement must therefore be defined and clarified. Such clarification will be a valuable resource for those designing and developing AI systems.

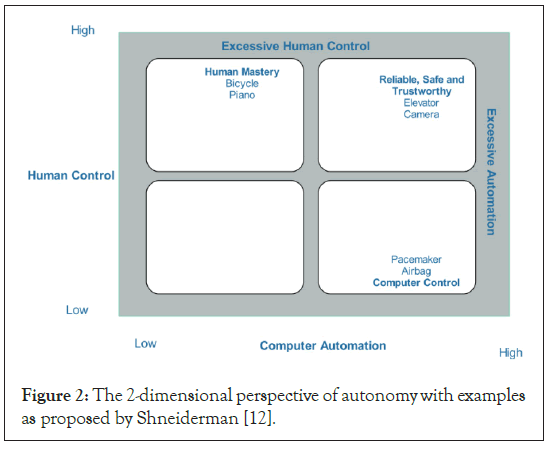

Rather than assuming a one-dimensional view of computer autonomy, we must now analyze other viewpoints. Shneiderman (2020) proposes a two-dimensional view of autonomy that is applicable to many AI systems, including recommender, consequential, and life-critical systems. It treats computer automation and human control as two separate dimensions. Figure 2 depicts the 2-dimensional framework built by Shneiderman (2020).

Figure 2: The 2-dimensional perspective of autonomy with examples as proposed by Shneiderman [12].

Human mastery is shown in Figure 2 as the region where there is more human control or engagement and much less computer automation. A bicycle is a good illustration since its whole motion is based on human effort. Computer control denotes devices with a high level of computer automation and a low level of human control. Airbags and pacemakers are two examples of devices that need little human involvement to operate. Reliable, Safe, and Trustworthy systems occupy the ideal region of the figure and encapsulate the core goal of Human-Centered AI: a high level of computer automation combined with a high level of human control. This framework has served as a reference for pain control designs, automobile control designs, and as a foundation for other control designs.
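The regions described above can be sketched as a simple classification over two independent scores. This is an illustrative sketch only: the function name, the 0 to 1 scoring, the 0.5 threshold, and the example scores are all assumptions made for the example and do not come from Shneiderman's framework, which is presented graphically.

```python
def hcai_quadrant(human_control: float,
                  computer_automation: float,
                  threshold: float = 0.5) -> str:
    """Place a system in one region of the two-dimensional framework.

    Both scores are assumed to lie in [0, 1]; the threshold separating
    "low" from "high" is an arbitrary illustrative choice.
    """
    for name, value in (("human_control", human_control),
                        ("computer_automation", computer_automation)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")

    high_human = human_control >= threshold
    high_automation = computer_automation >= threshold

    if high_human and high_automation:
        return "reliable, safe and trustworthy"
    if high_human:
        return "human mastery"
    if high_automation:
        return "computer control"
    return "low control, low automation"


if __name__ == "__main__":
    # Hypothetical scores chosen only to match the examples in the text.
    print("bicycle:", hcai_quadrant(human_control=0.9, computer_automation=0.1))
    print("airbag:", hcai_quadrant(human_control=0.1, computer_automation=0.9))
```

Unlike a single automation level, the two arguments vary independently, so a design can score high on both, which is the region the framework identifies with RST systems.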

An extended HAI framework

How AI/ML systems come to perform particular functions remains largely opaque. Many non-technical users regard these systems as black boxes, and so have a limited understanding of what really determines their behaviour [3]. It is also worth mentioning that certain AI assistants/systems have excelled only at basic tasks, while others have failed at far more essential and sophisticated ones [17]. Accidents involving autonomous vehicles underscore the need to focus more on HCI design challenges in order to create viable AI.

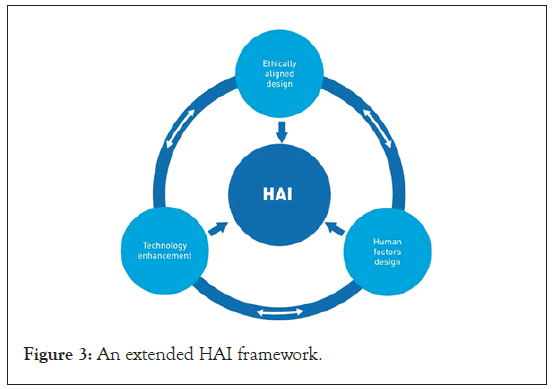

Xu (2019) presented a three-part extended HAI paradigm based on ethically aligned design, technology that reflects human intelligence, and human factors design. Figure 3 depicts the extended HAI framework suggested by Xu (2019).

Figure 3: An extended HAI framework.

In theory, the framework’s ethically aligned design provides fairness, reasonableness, non-discrimination, and justice in AI development and deployment. Technological advancement focuses on innovation that aims to express human intellect in the most rational and efficient manner possible. AI solutions that give explainable, intelligible, valuable, and usable outputs are the foundation of human factors design. The primary goal of this framework is to encourage a precise and complete approach to AI design, resulting in AI solutions that are safe, efficient, healthy, and rewarding (Xu, 2019).

Like the framework of Shneiderman (2020), the extended framework of Xu (2019) augments rather than replaces human skills, and ensures that human control remains essential, particularly in emergency circumstances. In essence, the HAI paradigms are part of the technology advancement and human-centered design that define the solution-oriented third wave of AI.

Komischke (2021) gave a brief summary of Artificial Intelligence (AI) and Human-Centered AI (HCAI). The article showed an example of how AI and HCAI may be used to build software solutions, covering the construction of use cases, user interaction, and the development of a smart assistant [18].

Many Artificial Intelligence (AI) research initiatives have focused on replacing humans rather than complementing and augmenting them. Fischer (1995) created human-computer cooperation scenarios to show the potential of Intelligence Augmentation (IA) via the use of human-centered computational devices. Focusing on IA rather than AI necessitated the development of new conceptual frameworks and techniques. Shared representations of context and purpose for understanding, mixed-initiative dialogs, problem management, and the integration of working and learning were all part of this approach. The system development efforts focused on the unique qualities of computational media rather than on imitating human talents. The work produced:

• Specific system architectures, such as a multifaceted architecture for characterizing design environment components and a process architecture for the evolution of such environments.

• Specific modules, such as critiquing systems.

• A wide range of design environments for different application domains [19].

Technology has made “AI in HCI” a burgeoning research topic with contributions from many academic institutions. At the inaugural AI in HCI conference, researchers discussed current and future research initiatives, with the aims of assessing the existing and future research landscape for AI in HCI and developing a global network of researchers in this subject. Twenty researchers attended the four-and-a-half-hour remote, interactive workshop. Individual research ideas were organized into eleven research topics, and participants selected the most popular of these categories. Each of the three highest-scoring categories was then explored in detail. On July 24, 2020, the topic was “Trust.” The others were refined after two sessions. Degen and Ntoa (2021) summarized the workshops’ conclusions and related them to previous field reports. The framework developed by Degen and Ntoa (2021) is intended to support the creation of trustworthy AI-enabled technologies [20].

The COVID-19 pandemic has increased the visibility of contactless interactions with consumer IoT devices. Speech biometrics will become more significant as companies move away from shared touch devices. Voice biometrics, which evaluates a person’s pitch, speech, voice, and tone, has been used to authenticate customers, prevent fraud, and reset passwords. However, little research has been done on consumers, particularly on the importance of trust in promoting use and acceptance of these services. Kathuria, et al., 2020 used models from psychology (Theory of Planned Behavior) and technology (Human-Centered Artificial Intelligence) to investigate the many antecedents of consumer trust in voice authentication (ease of use, self-efficacy, perceived usefulness, reliability, the perceived reputation of the service provider, perceived security, perceived privacy, fraud, and social influence). The use of vernacular speech, two-step authentication, and trust are all examined. The speaker identification techniques covered include frequency estimation, hidden Markov models, Gaussian mixture models and pattern matching. According to Kathuria, et al., 2020, the components that build trust in voice recognition are to be highlighted through custom-made prototypes, use scenarios, and qualitative and quantitative research. According to an early survey, people trust voice biometrics because of security, privacy, and reliability. Early use of speech biometrics for low-value financial transactions may help the speech biometrics ecosystem develop [21].

The workshop described by Li, et al., 2020 gave researchers a venue to discuss new opportunities for incorporating modern AI methods into HCI research, identify important problems to investigate, showcase computational and scientific methods that can be used, and share datasets and tools that are already available or suggest those that should be developed further. Among the topics of interest were deep learning methods for understanding and modelling human behaviours, hybrid intelligence systems that combine human and machine intelligence to solve difficult tasks, and tools and methods for interaction data curation and large-scale data-driven design. At the heart of these concerns was the question of how the data-driven and data-centric approaches of existing AI might affect HCI [22].

In a large-scale experiment published in 2020, Ragot, et al. asked 565 individuals to rate artworks on four aspects: liking, perceived beauty, originality, and relevance. The priming effect was assessed using two between-subject conditions: artworks presented as created by AI vs. artworks presented as created by a human artist. Paintings attributed to humans were held in far higher regard than paintings credited to artificial intelligence. Obtained with a method and sample size described as unprecedented, the findings show a negative bias in the perception of AI and a preference bias in favor of human systems [23].

Schmidt (2020) defined “Interactive Human Centered Artificial Intelligence” as well as the features that it must have. People need to maintain control in order to feel safe and independent. As a result, human administration and monitoring, as well as tools enabling humans to comprehend AI-based systems, are necessary. Schmidt argues that degrees of abstraction and control granularity are a generic answer to this problem. We must also be clear about why we want AI and what the research and development aims are for AI. It is critical to define the characteristics of future intelligent systems, as well as who will profit from such a system or service. According to Schmidt, AI and machine learning are comparable to raw materials. Such materials had a major impact on what people could create and what tools humans could design, which is why historical eras are named after them. He therefore argues that in the AI era, we need to move our attention away from the material (e.g., AI algorithms) and toward the tools and infrastructures that enable and benefit people. Artificial intelligence will most likely automate mental drudgery while simultaneously increasing our ability to comprehend the environment and predict events. For Schmidt, the most important goal is to figure out how to construct technologies that boost human intelligence without jeopardizing human values [24].

The NCR Human Interface Technologies Center (HITC), according to MacTavish and Henneman (1997), exists to address its clients’ business requirements via the deployment of innovative human-interface technologies. The HITC uses a User-Centered Design (UCD) methodology to create and develop these user-interface solutions, in which user requirements and expectations drive all design and development decisions. The HITC is made up of more than 90 engineers and scientists with backgrounds in cognitive engineering, visual design, image interpretation, artificial intelligence, intelligent tutoring, database mining, and innovative I/O technologies. The HITC was founded in 1988 and is supported entirely by the work it does for its clients [25].

Chromik, et al., 2020 created a decision support system (DSS) for money management in the construction business using a human-centered design approach with domain experts, and identified key requirements for control, personal contact, and sufficient data. To obtain an adequate level of confidence and reliance, they compared the system’s predictions with values provided by an Analytic Hierarchy Process (AHP), which indicates the relative importance of their users’ decision-making criteria. They created a prototype and evaluated it with seven construction specialists. By recognizing situations in which the ML prediction and the domain expert disagree, the DSS may be able to discover persuasion gaps and apply explanations more selectively. Their study yielded encouraging results, and they intend to apply their approach to a broader variety of explainable artificial intelligence (XAI) problems, such as providing user-tailored explanations [26].
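For readers unfamiliar with AHP, the sketch below shows how relative importance weights can be derived from pairwise comparisons of criteria. It uses the row geometric-mean method, a common approximation of AHP's principal-eigenvector weights, and is not taken from Chromik, et al.; the function name, the three unnamed criteria, and the comparison values are hypothetical.

```python
import math
from typing import List


def ahp_weights(pairwise: List[List[float]]) -> List[float]:
    """Approximate AHP priority weights with the row geometric-mean method.

    pairwise[i][j] states how many times more important criterion i is
    than criterion j, so the matrix must be square, positive and
    reciprocal (pairwise[j][i] == 1 / pairwise[i][j]).
    """
    n = len(pairwise)
    for i, row in enumerate(pairwise):
        if len(row) != n:
            raise ValueError("pairwise matrix must be square")
        for j, value in enumerate(row):
            if value <= 0:
                raise ValueError("all comparisons must be positive")
            if not math.isclose(value * pairwise[j][i], 1.0, rel_tol=1e-9):
                raise ValueError("matrix must be reciprocal")

    # Geometric mean of each row, then normalise so the weights sum to 1.
    geometric_means = [math.prod(row) ** (1.0 / n) for row in pairwise]
    total = sum(geometric_means)
    return [g / total for g in geometric_means]


if __name__ == "__main__":
    # Hypothetical judgements for three criteria: the first is twice as
    # important as the second and six times as important as the third.
    comparisons = [
        [1.0, 2.0, 6.0],
        [1 / 2, 1.0, 3.0],
        [1 / 6, 1 / 3, 1.0],
    ]
    print([round(w, 3) for w in ahp_weights(comparisons)])
```

For a perfectly consistent matrix such as this one, the geometric-mean weights coincide with the eigenvector weights; with inconsistent expert judgements the two methods can differ slightly, and a consistency check would normally be added.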

Since its inception in the 1960s, computer-generated algorithmic art has come a long way. Artists continue to adapt, take chances, and study how computers could be regarded as a creative medium as art and technology become more intertwined in the twenty-first century. Amerika, et al., 2020 focus on the idea of creative cooperation between human and machine-generated forms of poetic expression in possible forms of artificial intelligence. Artificial Creative Intelligence (ACI) is a fictitious AI poet whose spoken word poetry foreshadows the emergence of a new kind of authorship, posing philosophical questions regarding AI’s conceptual implications for creative practitioners [27].

According to Williams (2021), robots are a disruptive technology that encourages change. Human-Robot Interaction (HRI) is a new area with a lot of potential for worldwide change. Incorporating social intelligence into the design of human-robot interactions is, for now, a pipe dream. Moonshots may help science and engineering by motivating and encouraging research organizations and enterprises to take on apparently impossible tasks and broaden their horizons. Power and influence, privacy and trust, ethical judgments, and enjoyment are all aspects of social intelligence. What if robots took the initiative and tried to persuade you to think differently? HRI combined with social intelligence has the potential to lead to game-changing discoveries, reshaping the way people and computers interact in business and society [28].

Conversation is the most natural way for people to communicate with one another. According to Nishida (2007), conversation is essential to building a human-centered web intelligence paradigm, in which web intelligence engines are grounded in human society. The work describes a computational framework for transmitting information in a conversational manner, encapsulating conversational situations in packets of data called conversation quanta. Technologies are being developed to gather conversation quanta quickly, store them in a visually identifiable format, and reuse them in a situation-specific manner. Conversational informatics is a theoretical framework for monitoring, analysing, and modelling conversations. The paper discusses advances in conversational informatics that help in achieving these goals, and places the method in the context of Social Intelligence Design, which aims to promote social intelligence for group problem solving and learning [29].

Misuse, abuse, and disuse of technology are all rooted in a lack of trust in AI. But what is AI trust at its core? What are the aims and goals of the cognitive process of trust, and how can we encourage or assess their achievement? Jacovi et al., 2021 set out to address these questions. They examine a trust model that is based on interpersonal trust but differs from it. Their model relies on two key elements: the user’s vulnerability and the ability to anticipate the outcome of the AI model’s decisions. They also formalize trustworthiness, which rests on the idea that an implicit or explicit contract between a user and an AI model will be respected, and distinguish “warranted” from “unwarranted” trust. They look at how to build trustworthy AI, how to assess trustworthiness, and why trust is merited, both intrinsically and extrinsically. Finally, they formalize the link between trust and XAI [30].

In domains ranging from healthcare to criminal justice, Artificial Intelligence (AI) is increasingly assisting high-stakes human judgements. As a consequence, the field of explainable AI (XAI) has blossomed. By examining the philosophical and psychological origins of human decision-making, Wang, et al., 2019 tried to go beyond empirical, application-specific assessments of XAI. They proposed a conceptual framework for building human-centered, decision-theory-driven XAI based on an extensive review across many areas. Using this framework, they show how human cognitive processes lead to the need for XAI, as well as how XAI may be used to reduce common cognitive biases. To put the framework into practice, they built and implemented an explainable clinical diagnostic tool for critical care phenotyping and ran a co-design exercise with clinicians. They then showed how the framework links algorithm-generated explanations to human decision-making theories. Finally, they discussed the implications for XAI design and development [31].

Corneli, et al., 2018 employed design patterns as a medium and technique for obtaining information on the design process, signalling a clear meta-level change in emphasis. They examined popular design genres and proposed some theories about how they can impact the development of intelligent systems [32].

Recent years have seen a fast expansion in the use of automation and Artificial Intelligence (AI), including text classification, increasing our reliance on their performance and reliability. But as we grow increasingly reliant on AI applications, their algorithms become more complex, more intelligent, and harder to comprehend. Text classification matters in particular to medical and cybersecurity professionals. Human specialists struggle to deal with the sheer volume and speed of data, while machine learning techniques are frequently opaque and lack the context essential to make sound decisions. In response, Zagalsky, et al., 2021 offer an abstract Human-Machine Learning (HML) configuration that emphasizes mutual learning and cooperation. They used Design-Science Research (DSR) to learn about the HML configuration and to construct an application for it. The configuration is defined by its conceptual components and their functions. They then detail the development of Fusion, a technology that allows humans and machines to learn from one another. With two text classification case studies from the cyber domain, they examine Fusion and the suggested HML approach. Their results imply that classification performance may be enhanced when domain experts and ML models collaborate. In this way, the work supports researchers and developers of “human in the loop” systems. On the topic of human-machine cooperation, they discussed HML configurations and the problems of gathering and sharing data [33].

Despite the promises of data-driven Artificial Intelligence (AI), it remains unclear how to bridge the gap between traditional physician-driven diagnostics and an AI-assisted future. How might AI be meaningfully integrated into physicians’ diagnostic procedures, given that most AI is still in its infancy and prone to errors (e.g., in digital pathology)? Gu, et al., 2021 propose a set of collaborative techniques for engaging human pathologists with AI, taking into account AI’s capabilities and limitations. On this basis they prototype Impetus, a tool in which an AI takes varying degrees of initiative to assist a pathologist in detecting tumors from histological slides. They report the findings and lessons learnt from a study with eight pathologists and discuss future research on human-centered medical AI systems [34].

Porayska-Pomsta, et al., 2018 contributed to the expanding body of knowledge on the design and use of AI technology in real-world contexts for autism intervention. Their work, in particular, highlights key methodological challenges and opportunities in this area by leveraging interdisciplinary insights in a way that:

• Bridges educational interventions and intelligent technology design practices.

• Considers both the design of technology and the design of its use (context and procedures) on equal footing.

• Includes design contributions from a variety of stakeholders, including children with and without ASC [35].

To establish ground truth and train their algorithms, many of the studies reviewed in [36] depend on public datasets (82%) as well as third-party annotators (33%). The vast majority of this research (78%) relied on algorithmic performance evaluations of the models, with just 4% evaluating these systems with real customers. The authors recommended that computational risk detection researchers use more human-centered techniques when creating and testing sexual risk detection algorithms, to ensure that this essential work has a larger social impact [36].

Artificial Intelligence (AI) is gaining popularity in service-oriented organizations in the form of chatbots. A chatbot converses with people using natural language processing. People are divided on whether or not a chatbot should speak and act like a person. Svenningsson and Faraon, 2019 therefore developed a set of characteristics related to the perceived humanness of chatbots and how they may contribute to a pleasant user experience. These include avoiding small talk and speaking in a formal tone; identifying oneself as a bot and asking how it can help; providing specific information and articulating using sophisticated word choices and well-constructed sentences; asking follow-up questions during decision-making processes; and apologizing for misunderstandings and asking for clarification. These results may inform AI designers and larger conversations about AI acceptance in society [37].

Using AI and machine learning to better the lives of the elderly is crucial in smart cities. Several Ambient Assisted Living systems have Android apps, so the privacy and security of Ambient Assisted Living depend on Android applications that preserve privacy. Given the reduced decision-making capacity of some elderly users, the privacy self-management paradigm threatens their privacy and welfare. Elahi, et al., 2021 used human-centered AI to address these issues. In their technique, shared responsibility replaces self-management. To create suitable privacy settings and evaluate runtime permission requests, they created Participatory Privacy Protection Algorithms I and II, each illustrated with a case study. Compared with existing Android app privacy protection methods, these algorithms provide cognitive offloading while protecting users’ privacy [38].

Artificial intelligence (AI) and machine learning (ML) are becoming increasingly widespread. Human-centered AI is an AI and machine learning method that emphasizes the importance of developing algorithms as part of a larger system that includes people. Human-centered AI, according to Riedl, may be split into two categories:

• AI systems that understand people from a sociocultural standpoint.

• AI systems that help humans understand them.

They go on to say that social responsibility concerns like fairness, accountability, interpretability, and transparency are critical [1].

The inevitable existence and development of Artificial Intelligence (AI) was not anticipated. The greater AI’s impact on humans, the more important it is that we comprehend it. The study in [6] looks at AI research to determine whether there are any new design concepts or tools that might be used to further AI research, education, policy, and practice to benefit humans. Artificial intelligence (AI) has the ability to teach, train, and improve human performance, allowing individuals to be more productive in their occupations and hobbies. Artificial intelligence has the ability to improve human well-being in a variety of ways, including by improving food, health, water, education, and energy production. However, AI abuse might jeopardize human rights and lead to employment, gender, and racial inequality due to algorithm bias and a lack of regulation. According to Yang, et al., Human-Centered AI (HAI) refers to addressing AI from a human perspective while taking into account human events and settings. The majority of contemporary AI debates center on how AI can assist people in performing better. They did, however, evaluate how AI may hinder human progress and suggested a more in-depth conversation between technology and human experts to better grasp HAI from several angles.

Artificial Intelligence (AI) has enormous potential for societal advancement and innovation. It has been stated that designing artificial intelligence for humans is critical for social well-being and the common good. Despite this, the term “human-centered” is often used without referring to a philosophy or a broad methodology. Auernhammer, 2020, investigates the contributions of various philosophical theories and human-centered design techniques to the development of artificial intelligence. His study argues that humanistic design research plays an important role in cross-disciplinary collaboration between technologists and politicians in order to limit AI’s effect. Finally, using a truly human-centered mindset and methodology, human-centered Artificial Intelligence implies including humans and designing Artificial Intelligence systems for people [39].

The ability to create, narrate, understand, and emotionally respond to tales is referred to as narrative intelligence. According to Riedl (2016), incorporating computational narrative intelligence into artificial intelligences allows for a variety of human-beneficial applications. They bring forth some of the machine learning challenges that must be overcome in order to build computational narrative intelligence. Finally, they claim that computational storytelling is a step toward machine enculturation, or the teaching of social values to machines [40].

Robots and chatbots are supporting humans with algorithmic decision making and revolutionizing numerous areas. Advances in deep learning have improved AI adoption. AI must be oriented on human wants, interests, and values since it is becoming more widespread and may be considered as the new “electricity.” In order to build Human-centered AI (HAI) or Human-AI interaction solutions, HCI researchers must first create user-centered design ideas and approaches based on human factors psychology. For example, Bond, et al., 2019 argue that explainable AI (XAI) is required in algorithmic decision making. Automation bias arises when individuals place too much trust in computer-generated suggestions. Other ethical HAI topics covered include algorithmic discrimination, ethical chatbots, and end-user machine learning. To properly calibrate human-machine trust and build a reasonable basis for future symbiosis, the foregoing problems must be addressed [41].

Online experimentation systems that allow remote and/or virtual experiments in an internet-based environment are critical in skill-enhanced online learning. An online system that encompassed the whole control engineering experimental approach was the goal of Lei, et al., 2021. The human-centered design is explored, as are the control and security-oriented designs. The system allows for interaction, configuration changes, and 3-D animations. There are also better 3-D effects like anaglyph and parallax 3-D. As a result, people may see 3-D effects on experiment equipment or in a 3-D virtual world. A twin tank system application case was also investigated to confirm the proposed system’s performance [42].

With AI’s inevitable rise, the question of “Will intelligent systems be safe for future humankind?” has gained traction. As a consequence, many scientists are working to address concerns that AI systems may become irrational or have dangerous views. Kose and Vasant, 2017 consider the literature on artificial intelligence safety and the future of artificial intelligence. They present a hypothesis for creating safe intelligent systems by determining the life-time of an AI-based system based on operational characteristics and terminating older generations of systems that seem to be safer. Their report gives a quick summary and invites more research [43].

Artificial intelligence research strives to include more intelligence in Ambient Intelligence (AmI) settings, offering people greater assistance and access to the data they need to make better decisions while engaging with these environments. Ramos, et al., who published a special issue on AmI in 2008, take an artificial intelligence approach to the problem [44,45].

Digital health applications are gaining popularity in the market. Clinicians are constantly bombarded with fresh data that they must swiftly process to make clinical decisions about their patients’ care. Overloading physicians with data leads to physician burnout. Using AI technologies like machine learning and deep learning to help physicians make better judgments has also been discussed. A physician or data scientist must be engaged in the model development, validation, and deployment process. A framework for incorporating human input into the AI model should be in place using human-in-the-loop implementation models and participatory design methodologies. A system dynamics model that emphasizes feedback loops within clinical decision-making processes is the ultimate objective of this work. Strachna and Asan, 2020 suggest using system dynamics modelling to represent tough problems that AI models can solve and illustrating how AI models would fit into current workflows.

Human-computer interface design evolves with advances in information technology and high-tech innovation, say Lili and Yanli (2010). The fast growth of intelligence technology points the way for human-computer interface design. The ultimate goal of HCI design is user-centeredness. It will help users complete tasks while also ensuring their satisfaction [46].

Human-centric future decision-making tools will rely significantly on human supervision and control. Because these systems perform vital functions on a regular basis, reliable verification is required. Heitmeyer, et al., 2015, a product of an interdisciplinary research team comprising professionals in formal techniques, adaptive agents, and cognitive science, addresses this difficulty. For example, a cognitive model predicts human behavior and an adaptive agent assists the individual (e.g., formal modeling and analysis). Heitmeyer, et al. (2015) propose using event sequence charts, a kind of message sequence chart, and a mode diagram to represent system modes and transitions. The paper also reports results from a new pilot study that analyzed the agent design using synthesized user models. Finally, in human-centric systems, a cognitive model predicts human overload. They describe a human-centric decision system for autonomous vehicle control to highlight their novel methods [47].

In the field of human-robot interaction, an experimental approach known as Wizard of Oz is often used, in which a human operator (the researcher or a confederate) remotely controls the system’s behaviour. Robots can only conduct a restricted range of pre-programmed actions while they remain autonomous during an interaction. Magyar and Vircikova (2015) advocated using reinforcement learning to change autonomous robotic behavior during interaction while also using the benefits of cloud computing. The main objective is to make robotic behaviors more engaging and effective, in order to better prepare robots for long-term human-robot interaction [48].

The era of artificial intelligence is here. Machines are already able to replicate certain human qualities due to Artificial Intelligence. Artificial intelligence conversational beings, or chatbots, are computer programs that can conduct near-natural conversations with people. Because chatbots are a common kind of Human-Computer Interaction (HCI), they are critical. Currently, the bulk of these applications are used as personal assistants. Khanna, et al., 2016 created two working chatbots in two distinct programming languages, C++ and AIML, in order to thoroughly examine their development and design processes and envision future possible advancements in such systems. They focused on AI using chatbots, addressing the framework architecture, capabilities, applications, and future scope [49].

As autonomous systems become a critical component of better decision-making in the workplace, we have the opportunity to alter human-machine interaction. In the future of business, trade, and government services, humans and AI will increasingly interact. AI systems are expanding and enhancing decision support by complementing human abilities. This collaboration requires biological human-AI teams that interact, adapt, and learn from one another. Kahn, et al., 2020 created a new human-machine teaming spectrum. They use a collection of humans and AI systems to test alternative human-AI cooperation results. They investigate how human-machine teaming influences average handling time and response quality, both of which affect customer service. The researchers looked at human-only, AI-only, and human-AI cooperation. In the parameter space they studied, a human+AI collaboration works best [50].

The future looks promising with the continued evolution of HTML5 and related technologies, as well as the fast expansion of mobile social apps like WeChat and Sina. There is a rush of well-designed mobile HTML5 advertising. Audio-visual, emotional, and spiritual interactivity are provided through HTML5 advertising on mobile terminals. Autonomous learning is vital in college. With the advancement of science and technology, network teaching has steadily entered the college and university classroom. The value of network teaching is represented in college and university teaching materials. Artificial intelligence will have a broad influence on education, promoting transformation. In the age of AI, Zhu, 2021 explores the influence of AI technology on education, then describes the teaching system and the interplay of learning approaches and resources [51].

Hou, 2021 is a must-read for academics, designers, developers, and all practitioners engaged in developing and implementing 21st-century human-autonomy symbiosis technology (Why). It tackles issues such as the most appropriate analytical approach for the functional requirements of intelligent systems, design procedures, implementation techniques, assessment methodologies, and trustworthiness of linkages (How). These issues were discussed using real-world examples while taking into account technological limits, human abilities and limitations, and the functionality that AI and autonomous systems should attain (When). By optimizing the interaction between human intelligence and AI, the audience gained insights into a context-based and interaction-centered design approach for developing a safe, trustworthy, and constructive partnership between people and technology. The obstacles and risks that may arise when boosting human skills with AI, cognitive, and/or autonomous systems were also studied in order to guide future research and development endeavours [52].

Drawing on a ten-year study of collective learning, Pournaras presented the outcomes of human-centered distributed intelligence in socio-technical systems. Unlike centralized AI, which allows for algorithmic discrimination and nudging, decentralized collaborative learning is designed to be participatory and value-sensitive, conforming to privacy, autonomy, fairness, and democratic values. As a result of these constraints, collaborative decision-making becomes a complex combinatorial NP-hard problem. These are the difficulties that EPOS and collaborative learning seek to solve. Collective learning benefits self-management in energy, transportation, supply chains, and the sharing economy. Pournaras, 2020, emphasized the paradigm’s wide use and social impact [53].

Spatial cognition is important in cognitive research and in everyday life when individuals interact. It would be valuable if a robot could interact with humans like a human. Because of this, Mu, et al. 2017 constructed an intelligent robot that could use spoken language and conduct spatial cognitive tasks like collecting tools and creating machines. The human-robot spatial cognition interaction system uses the ACT-R cognitive architecture. In summary, the spatial cognitive model of Mu, et al. included natural language recognition, synthesis, visual pattern processing, and gesture recognition. It may be utilized in six distinct spatial cognitive working settings [54].

Zhang, et al., 2020 investigated the necessity and basic connotation of implementing decision-centered warfare, pointing out that the development of artificial intelligence and autonomous systems created the conditions for doing so, as well as the force design required to realize mosaic warfare as a decision-making centre. The command-and-control procedure has also been changed [55].

Vorm, 2020, highlighted the growing need for increased human-AI interaction research in the department of military in order to produce a cohesive and acceptable AI integration strategy in the warfighting and defence sectors [56].

Hybrid Intelligence (HI) was described by Akata, et al., 2020 as the fusion of human and machine intelligence, improving rather than replacing human cognition and capacities and reaching goals that neither humans nor computers could achieve. In order to build a research agenda for HI, they listed four problems. HI is a hot issue in artificial intelligence research these days [57].

Recently, Explainable Artificial Intelligence (XAI) and Interpretable Machine Learning (IML) have achieved substantial academic advances. Increased business and government investment, and public awareness are aiding expansion. Every day, autonomous judgments impact humanity, and the public must accept the results. A decision made using an IML or XAI application is usually explained in terms of the data used to make the decision. Such explanations seldom represent an agent’s opinions about other actors (human, animal, or AI) or the methods employed to produce their own explanation. Humans’ tolerance for AI decision-making is uncertain. Dazeley, et al., 2021 aimed to develop a conversational explanation system based on layers. They evaluated existing techniques and the integration of technologies leveraging broad explainable Artificial Intelligence for various levels (Broad-XAI) [58].

Given the ubiquitous usage of artificial intelligence in our daily lives, it’s critical to investigate how far individuals in Computer-Mediated Communication (CMC) transfer “human-human interaction” concepts (such as fairness) to AI. The study in [59] introduced and investigated the role of self-perceived reputation in influencing people’s fairness toward other humans and two types of artificial agents, namely artificial intelligence and random bots, in an online experiment based on a customized version of the Ultimatum Game (UG). People have a penchant for projecting real-life psychological dynamics onto inanimate items, according to the researchers. Men and women behave differently when given a reputation, depending on two well-known psychological phenomena: self-perception theory and behavioral compensation, respectively [59].

Ambient Assisted Living solutions that use AI and machine learning to improve the lives of the elderly are critical in smart cities. Several Ambient Assisted Living systems are controlled via Android apps. As a consequence, Ambient Assisted Living privacy and security rely on Android apps that employ privacy self-management. Mistakenly assuming that the elderly are incapable of making decisions, the privacy self-management paradigm jeopardizes their privacy and wellbeing. Elahi, et al., 2021 employed human-centered artificial intelligence to address these difficulties. Rather than relying on self-management, this strategy relies on shared accountability. In an Ambient Assisted Living app, they developed Participatory Privacy Protection Algorithms I and II for creating appropriate privacy settings and processing runtime permission requests. They provided a case study to demonstrate the methods [60].

Krupiy, 2020 addressed the effects of artificial intelligence technology on both individuals and society. It considers the effects in terms of social fairness. Those who have already faced hardship or discrimination are given special attention. The article takes university admissions as an example, where the institution utilizes entirely automated decision-making to assess a candidate’s potential. The study suggests that artificial intelligence decision-making is an institution that reconfigures interactions between individuals and institutions. Institutional components of AI decision-making systems disadvantage those who already confront discrimination. Depending on their design, artificial intelligence decision-making systems may promote social harmony or division [61].

Di Vaio, et al., 2021 analysed the literature from the viewpoints of AI, big data, and human–AI interaction. They looked at how data analytics and intelligence may help policymakers. In their study, the authors examined 161 English-language publications published between 2017 and 2021. Aspects of the study included: preceding study contributor participation; aggregated data intelligence; and analytics research contributions. Their research focused on data intelligence and analytics. The findings show less attention devoted to the function of human–artificial intelligence in public sector decision-making. The report by Di Vaio, et al. emphasizes the necessity and usefulness of data intelligence and analytics utilization in government. Their research seeks to improve academic and practitioner knowledge of big data, AI, and data intelligence [62].

Businesses must keep up with emerging technology like AI to benefit from it. Current AI software works well when the user experience (UX) is excellent. User-centric AI in applications can be improved by learning about acceptable AI technologies and leveraging their affordability. That knowledge gap might be filled by UX designers’ current experience in human-centric information architecture. Kolla, et al., 2021 built and evaluated an e-learning approach, with Design Science Research (DSR) guiding the whole analytical procedure. It was created via semi-structured interviews with six Visma AI specialists and was analyzed using the Technology Acceptance Model (TAM). The findings imply that e-learning will soon be utilized to build AI. This was followed by a review of the e-learning approach with visual feedback, which had improved since the first rating. Using a novel e-learning technique, this study aims to turn organizations into AI specialists. Future research should increase AI learning [63].

Schmidt, et al., 2021 believe that three broad categories of potential technological solutions presented by Lepri, et al., 2021 are critical to developing a human-centric AI:

• Privacy and data ownership.

• Accountability and transparency.

• Justice.

They also stress the importance of bringing together interdisciplinary teams of academics, practitioners, politicians, and citizens to co-develop and test algorithmic decision-making methods that promote justice, accountability, and transparency in the real world while maintaining privacy [64].

Schmidt, et al., 2021, explore the relevance of Human-Computer Interaction (HCI) in the conception, design, and deployment of human-centered Artificial Intelligence (AI). AI and machine learning (ML) must be ethical and add value to humans, both individually and collectively, for them to be successful. The benefits of combining HCI and UXD methodologies to create sophisticated AI/ML-based systems that are widely accepted, dependable, safe, trustworthy, and responsible are the focus of their discussion. AI and machine learning algorithms will be combined with user interfaces and control panels that allow for real human control [65].

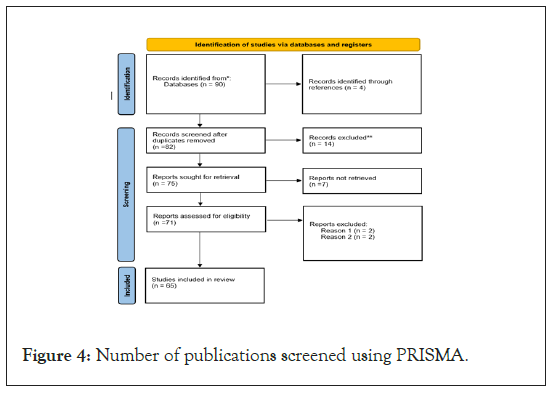

The goal of this review was to identify, evaluate, and analyse Human Centered AI papers. In September 2021, a systematic search was undertaken in five electronic databases (Science Direct, Google Scholar, Springer, ACM Digital Library, and IEEE Xplore) for material published from 1976 to 2021 that satisfied the inclusion criteria. Various keywords were used, including “Human Computer Interaction,” “Human Centered AI,” and “Human Centered Computing”. The keywords were combined using the Boolean operators ‘AND’, ‘OR’, and ‘NOT’ for a high-quality search technique. Peer-reviewed journals and articles were considered. The inclusion and exclusion criteria were created after extensive debate among the authors and based on the study’s goal. The preliminary selection was made by reading through the titles, abstracts, and keywords to find records that met the inclusion and exclusion criteria. Duplicates were removed, and publications that appeared to be relevant based on the inclusion and exclusion criteria were read in full and reviewed. Other relevant publications were found using the reference lists of the accepted literature. The article selection and screening were reported using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram.
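The way keywords can be combined with Boolean operators may be sketched as follows. This is only an illustration: the review does not report the exact query strings submitted to each database, so the function name `build_query`, its parameters, and the resulting query syntax are assumptions rather than the authors' actual procedure.

```python
def build_query(any_of, all_of=(), none_of=()):
    """Build a Boolean search string (hypothetical helper, not from the review).

    any_of  -- phrases joined with OR (at least one must match)
    all_of  -- phrases appended with AND (each must match)
    none_of -- phrases appended with NOT (none may match)
    """
    # Quote each phrase so that multi-word keywords are searched as a unit.
    query = "(" + " OR ".join(f'"{term}"' for term in any_of) + ")"
    for term in all_of:
        query += f' AND "{term}"'
    for term in none_of:
        query += f' NOT "{term}"'
    return query


# The three keywords named in the review, combined with OR.
keywords = [
    "Human Computer Interaction",
    "Human Centered AI",
    "Human Centered Computing",
]
query = build_query(keywords)
print(query)
```

Individual databases differ in the operator syntax they accept, so a string like this would normally be adapted per source.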

Inclusion and exclusion criteria

To be included in the review, a paper had to be about human centered AI and its applications. Any work that fell outside of this scope, including literature written in languages other than English, was omitted.

Data collection and organization

The collection of data and categories were based on objective and comprehensive literature reviews as well as author conversations. The following categories have been created only for the purpose of assessing, analysing, and evaluating the study:

Category of HAI

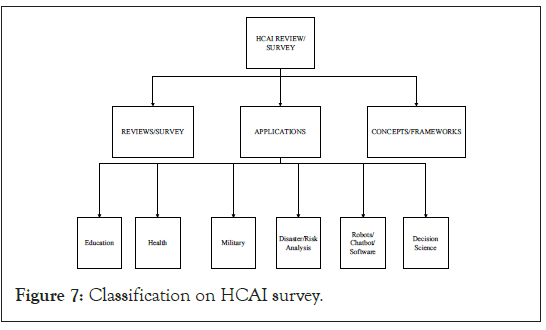

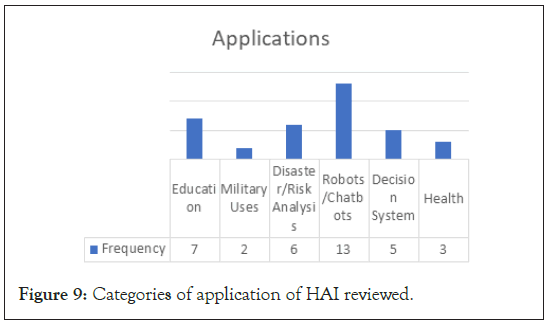

The papers were categorized as reviews/surveys, applications, or concepts/frameworks. The applications were sub-categorized into education, health, military, disaster/risk analysis, robots/chatbots/software, and decision science.

Electronic database: The electronic databases or sources include ACM Digital Library, Google Scholar, IEEE Xplore, Science Direct, Springer.

Year of publication: This category specifies the year range of publication of the articles.

Literature evaluation and analysis

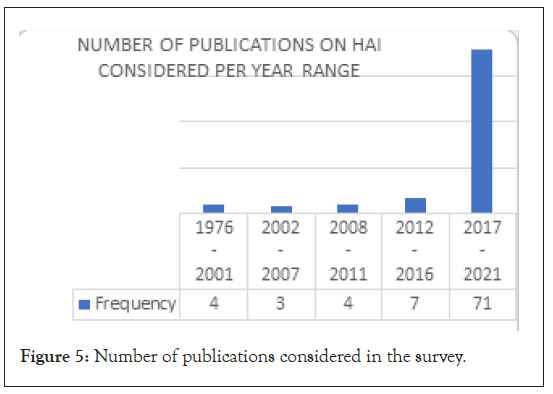

Based on the above-defined categories, the selected papers were assessed, studied, and evaluated. To evaluate the human centered AI literature, an analysis was performed on each of the categories (category, electronic database, and year of publication). The total number of counts (n) of each type of attribute was used to compute the percentages of the attributes of the categories. Because some studies fell into multiple categories, the total number of articles presented in the study was less than the number of counts for these categories. We found 94 publications after searching multiple web databases and reviewing titles, abstracts, and keywords. After scanning the goal, method, and conclusion portions of these publications, 14 articles were eliminated. After removing duplicates, 82 articles were assessed and scored. As shown in Figure 4, the investigation and analysis comprised 65 publications after full-text reading (mainly based on a review of Human Centered AI) (Figure 5).
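The counting scheme described above, in which one paper can contribute to more than one category so that the number of counts exceeds the number of papers, can be illustrated with a small sketch. The paper identifiers and labels below are hypothetical toy data, not records from the review.

```python
from collections import Counter

# Hypothetical toy records: each paper may carry more than one category label.
papers = {
    "paper_a": ["applications"],
    "paper_b": ["applications", "concepts/frameworks"],
    "paper_c": ["reviews/surveys"],
}

# Count every label occurrence across all papers.
counts = Counter(label for labels in papers.values() for label in labels)

# Percentages are taken over the total number of counts, not the number of papers.
total_counts = sum(counts.values())
percentages = {
    label: round(100 * n / total_counts, 1) for label, n in counts.items()
}

print(len(papers), total_counts)  # number of papers vs. number of counts
print(percentages)
```

In this toy example three papers yield four counts, which is why percentages computed from counts need not correspond to a share of distinct papers.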

Figure 4: Number of publications screened using PRISMA.

Figure 5: Number of publications considered in the survey.

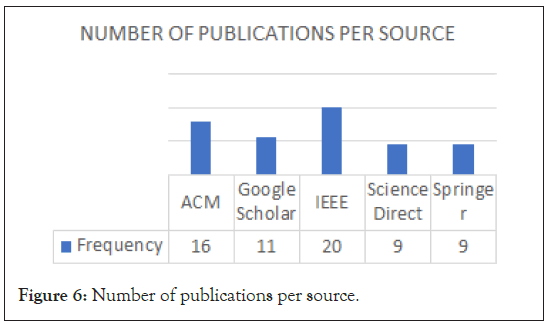

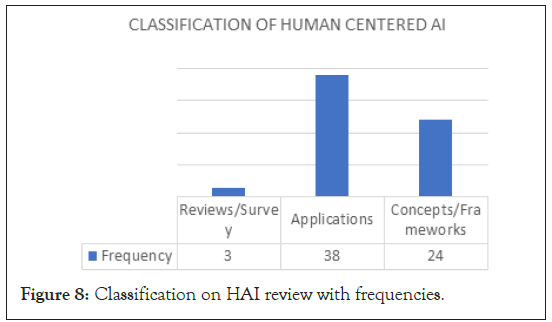

The survey centered on selected research publication databases based on the unique keyword “Human Centered AI”. With the motive of understanding the various strides made in this area, the year range considered included all relevant publications to date. The oldest publication found was from 1976, with the latest from 2021. As Figure 4 depicts, the later years have seen a rise in publications regarding human-centered AI. The databases considered were ACM Digital Library, Google Scholar, IEEE Xplore, Science Direct and Springer (Table 4). The final number of selected publications per source is summarized in the bar graph in Figure 6. From careful observation and analysis, we classified the publications under the categories illustrated in Figure 7 and Table 5. As Figure 7 depicts, the main categories after the survey were reviews/surveys, applications and concepts. Figure 8 illustrates the frequencies established with respect to this initial categorization. The applications category was sub-categorized into various areas per the evaluation that was undertaken, as Figure 9 depicts.

| Source | References | Frequency |
|---|---|---|
| ACM | [25][29][32][35][37][31][24][22][27][23][26][30][28][36][34][33] | 16 |
| Google Scholar | [40][41][1][39][8][6][38][3][15][16][4] | 11 |
| IEEE | [44][46][47][48][49][43][54][50][57][45][55][53][56][51][52][42][7][11][12][5] | 20 |
| ScienceDirect | [61][60][59][62][63][58][64][10][14] | 9 |
| Springer | [19][21][65][20][18][2][9][13][17] | 9 |

Table 4: Data sources and frequency.

| Literature classification | Total number of papers |
|---|---|
| Reviews/Surveys | 3 |
| Applications | 38 |
| Concepts/Frameworks | 24 |

Table 5: Classification on HCAI survey.
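As a quick consistency check, the counts in Table 4 and Table 5 can be totalled and converted to percentage shares. The sketch below uses only the frequencies printed in the two tables; the helper name `shares` and the rounding to one decimal place are illustrative choices, and the percentages are derived here rather than reported by the review.

```python
# Frequencies copied from Table 4 (sources) and Table 5 (classification).
source_counts = {
    "ACM": 16,
    "Google Scholar": 11,
    "IEEE": 20,
    "ScienceDirect": 9,
    "Springer": 9,
}
class_counts = {
    "Reviews/Surveys": 3,
    "Applications": 38,
    "Concepts/Frameworks": 24,
}


def shares(counts):
    """Return the total and each entry's percentage share (one decimal place)."""
    total = sum(counts.values())
    return total, {name: round(100 * n / total, 1) for name, n in counts.items()}


source_total, source_shares = shares(source_counts)
class_total, class_shares = shares(class_counts)

print(source_total, source_shares)
print(class_total, class_shares)
```

Both tables sum to 65, matching the number of publications retained after full-text reading.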

Figure 6: Number of publications per source.

Figure 7: Classification on HCAI survey.

Figure 8: Classification on HAI review with frequencies.

Figure 9: Categories of application of HAI reviewed.

This paper agrees with the notion that there is an intrinsic need to balance human involvement with increasing computer automation, which is relevant in achieving fair, just and reliable systems, especially in the era of chatbots and other AI systems. AI development must be carried out responsibly. The study evaluated how AI may hinder human progress and suggested a more in-depth conversation between technology and human experts to better grasp HAI from several angles. To summarize, research institutions, governments, and other relevant authorities must carefully craft laws and norms to control AI operations.

Human-centered AI, a new subject, offers many research possibilities to increase usability and assure the creation of fair, just, and reasonable AI systems. Listed below are some of our suggestions.

1. The 2-dimensional autonomy framework by Shneiderman could be further extended to have a third or fourth dimension to give room for specificity of an AI system.

2. To avoid the feared uncontrolled AI autonomy, multiple research approaches might be combined into one worldwide standard to guide future advancements.

3. The COVID-19 epidemic necessitates e-learning solutions that use UX and HAI concepts. Researchers might develop frameworks that combine these basic ideas to construct fair, efficient, effective, and just e-learning systems.

4. Data analytics and decision sciences demand an organized knowledge of significant patterns in massive data sets. Adding HAI concepts here will likely result in better human-centered data models that are simple to grasp and analyse.


Citation: Domfeh EA, Wayori BA, Appiahene P, Mensah J, Awarayi NS. (2022) Human-Centered Artificial Intelligence: A Review. Int J Adv Technol. 13:202.

Received: 28-Jul-2022, Manuscript No. IJAOT-22-17679; Editor assigned: 02-Aug-2022, Pre QC No. IJAOT-22-17679(PQ); Reviewed: 16-Aug-2022, QC No. IJAOT-22-17679; Revised: 23-Aug-2022, Manuscript No. IJAOT-22-17679(R); Published: 30-Aug-2022, DOI: 10.35248/0976-4860.22.13.202

Copyright: © 2022 Domfeh EA, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.