Journal of Psychology & Psychotherapy

Open Access

ISSN: 2161-0487

Short Communication - (2024) Volume 14, Issue 4

Identifying Autism Spectrum Disorder (ASD) is challenging due to its complex and varied nature, making early detection important for effective intervention. Recently, there has been considerable discussion about using deep learning algorithms to improve ASD diagnosis through neuroimaging data analysis. To address the limitations of current techniques, this research introduces an innovative approach called the Multi-View United Transformer Block of Graph Convolution Network (MVUT_GCN). MVUT_GCN leverages the benefits of multi-view learning and convolution processes to extract subtle patterns from structural and functional Magnetic Resonance Imaging (MRI) data. A comprehensive analysis using the Autism Brain Imaging Data Exchange (ABIDE) dataset demonstrates that MVUT_GCN outperforms the existing Multi View Site Graph Convolution Network (MVS_GCN), achieving a +3.44% improvement in accuracy. This enhancement highlights the effectiveness of our proposed model in identifying ASD. Improved accuracy and consistency in ASD diagnosis through MVUT_GCN can facilitate early intervention and support for ASD patients. Additionally, MVUT_GCN's interpretability bridges the gap between deep learning models and clinical insights by aiding in the identification of biomarkers associated with ASD. Ultimately, this work advances our understanding of ASD and its practical management, with the potential to improve outcomes and quality of life for those affected.

Graph convolution network; Neuroimaging; Autism spectrum disorder; Autism Brain Imaging Data Exchange (ABIDE); Alzheimer's disease

Autism Spectrum Disorder (ASD) exemplifies the complexity of the human mind, with its wide range of symptoms and behaviors making diagnosis a nuanced and challenging task. This article explores the intricacies and obstacles associated with identifying ASD, highlighting the necessity of understanding its diverse manifestations. One of the primary challenges in diagnosing autism stems from its spectrum nature. The term "spectrum" signifies the vast variability in symptom type and severity among individuals with ASD. While some may display repetitive behaviors and social interaction difficulties, others might possess exceptional talents alongside social challenges. This variability often delays diagnosis, as individuals may not conform to predefined criteria. Gender differences in ASD presentation pose another significant challenge. Historically, diagnostic criteria and research have been predominantly based on male-centric observations, potentially missing the subtleties of how autism manifests in females. Consequently, females with ASD may be underdiagnosed or diagnosed later due to a lack of awareness regarding gender-specific nuances. ASD frequently co-occurs with other developmental, psychiatric, or neurological conditions, creating overlapping symptoms that complicate diagnosis. Differentiating between the core features of autism and those of comorbid conditions requires meticulous evaluation. The presence of overlapping symptoms often demands that clinicians navigate a complex web of potential contributing factors. The dynamic nature of human development adds further complexity to the diagnostic process. ASD symptoms can evolve over time, and some individuals may develop compensatory mechanisms that obscure early signs. This developmental variability necessitates a longitudinal diagnostic approach, considering the evolving nature of behavior, communication, and social interactions. Cultural and socioeconomic factors also influence ASD diagnosis. 
Variations in cultural norms, expectations, and access to healthcare services can affect both the recognition of symptoms and the likelihood of seeking a diagnosis. This underscores the importance of a culturally sensitive diagnostic approach that respects diverse perspectives and experiences. In diagnosing autism spectrum disorder, it becomes clear that a one-size-fits-all approach is insufficient. The spectrum nature of ASD, gender disparities, overlapping symptomatology, developmental variability, and cultural factors all emphasize the need for a comprehensive and individualized diagnostic process. As we unravel the complexities of autism, it is important to continually refine diagnostic methodologies to ensure early and accurate identification and to pave the way for timely interventions and support. Early and accurate diagnosis of ASD is important for effective intervention and support, shaping the developmental trajectory of individuals with ASD. Identifying ASD early harnesses the critical stages of neural plasticity, enabling targeted interventions that optimize cognitive, social, and communicative development. Personalized strategies tailored to each individual's unique profile maximize intervention effectiveness, providing a customized roadmap for growth. In educational settings, early diagnosis empowers educators to implement specialized methodologies and individualized support plans, transforming classrooms into supportive environments. For families, early identification provides a clear understanding of their child's needs, access to essential resources, and the ability to foster a nurturing and proactive environment. Addressing coexisting conditions and behavioral challenges early on mitigates their impact, promoting emotional well-being for individuals with ASD and their families. 
Economically, early intervention reduces the need for more intensive and costly treatments later, alleviating long-term economic burdens and fostering a more inclusive society. In the narrative of autism, early diagnosis is a catalyst for positive change, dismantling barriers and revealing the untapped potential within each person on the spectrum. It is the basis of effective intervention and unwavering support, guiding individuals with ASD toward a future of limitless potential.

Functional Magnetic Resonance Imaging (fMRI) is increasingly important in autism diagnosis due to its ability to reveal neural activity and connectivity. Unlike structural imaging, fMRI captures blood flow changes linked to neural activity, allowing visualization of how brain regions communicate during tasks or at rest. This aids in mapping functional brain networks and identifying atypical connectivity patterns in autism. Researchers are identifying neural biomarkers through fMRI, seeking patterns that distinguish individuals with autism from neurotypical individuals, which can enhance diagnostic accuracy. Task-based fMRI studies examine brain activation during specific tasks, highlighting differences in social interaction, communication, and sensory processing. Resting-state fMRI, which examines brain activity at rest, reveals intrinsic connectivity alterations in autism. fMRI provides objective, quantitative brain activity measurements, reducing reliance on subjective behavioral assessments. This objectivity is important for individuals with subtle behavioral manifestations or communication difficulties. fMRI studies also help identify subtypes within the autism spectrum by revealing distinct neural signatures, aiding personalized interventions. Advances in data analysis, including machine learning, enhance the extraction of meaningful information from fMRI data, contributing to more accurate diagnostic models. fMRI holds potential for early autism detection, potentially identifying abnormalities before behavioral symptoms appear, which is important for early intervention. Combining fMRI findings with behavioral assessments offers a comprehensive understanding of autism, improving diagnostic accuracy and intervention strategies. Machine learning algorithms applied to fMRI data are uncovering complex neural biomarkers for autism. These data-driven approaches identify alterations in resting-state and task-based functional connectivity, enhancing diagnostic accuracy. 
Combining fMRI with other imaging modalities and focusing on specific brain regions provides objective, quantifiable measures, reducing diagnostic delays and variability.
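To make the notion of functional connectivity concrete, the following minimal sketch builds a connectivity matrix from per-region time series. It assumes Pearson correlation as the connectivity measure, which is a common choice but is not stated in this article, and the toy `roi_series` values and function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length time series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def connectivity_matrix(roi_series):
    """Pairwise correlation between all regions of interest (ROIs)."""
    n = len(roi_series)
    return [[pearson(roi_series[i], roi_series[j]) for j in range(n)]
            for i in range(n)]


# Hypothetical toy signals: three ROIs, four time points each.
roi_series = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],  # rises together with ROI 0
    [4.0, 3.0, 2.0, 1.0],  # falls while ROI 0 rises
]
fc = connectivity_matrix(roi_series)
```

Each entry `fc[i][j]` can then serve as an edge weight between regions `i` and `j` in a brain graph, which is the kind of input the graph-based models discussed in this article operate on.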

Functional Brain Networks (FBN) have garnered significant attention for diagnosing neurological disorders such as Autism Spectrum Disorder (ASD). Accurate classification is challenging due to noisy correlations in brain networks and significant subject heterogeneity. The MVS-GCN model uses graph neural networks to learn efficient end-to-end representations of brain networks. By integrating multi-view graph convolutional neural networks with prior knowledge of brain anatomy, this approach aims to enhance classification performance and identify potential functional subnetworks. The authors [1] evaluated the MVS-GCN model using the Alzheimer's Disease Neuroimaging Initiative (ADNI) and Autism Brain Imaging Data Exchange (ABIDE) datasets, demonstrating its superiority over existing methods. Inspired by this concept, a new Multi-View United Transformer Block (MVUTB) was introduced to integrate views in a unified form. The proposed model also enhances class discrimination performance using a graph convolution network.

Related work

The study by Wen, et al. [1] presents the MVS-GCN, a multi-view graph convolution network aimed at improving Autism Spectrum Disorder (ASD) diagnosis. By incorporating prior knowledge of brain anatomy, the model leverages multi-view graph convolutional neural networks to enhance the representation and classification of brain networks. The MVS-GCN [1] is designed to tackle noisy correlations and subject heterogeneity, common challenges in neurological disorder diagnosis. Using datasets from the Alzheimer's Disease Neuroimaging Initiative (ADNI) and Autism Brain Imaging Data Exchange (ABIDE), the model demonstrated superior performance over existing methods, highlighting its potential for more accurate and interpretable ASD diagnosis. The key insight is the novel MVS-GCN architecture, which combines graph structure learning, multi-view embedding, and prior knowledge incorporation to improve ASD diagnosis from functional brain networks while providing interpretable subnetwork biomarkers. The graph structure learning constructs cleaner and more consistent brain networks across subjects. The multi-view embedding captures correlations among different sparse network levels through a shared layer and view consistency regularization. The prior subnetwork regularization enhances representation of critical ASD-related subnetworks such as the salience and default mode networks. Experiments on ABIDE show that MVS-GCN outperforms state-of-the-art methods, with an average accuracy of 69.38%, and that the identified subnetworks align with previous ASD neuroimaging findings. Autism Connect [2] is a GCN model that combines local and global GCNs to identify ASD from resting-state fMRI data. The local GCN captures local functional connectivity patterns, while the global GCN captures global brain network topology. Autism Connect achieves 71.76% accuracy on the ABIDE dataset, outperforming traditional machine learning methods. 
It identifies discriminative brain regions (superior frontal gyrus, middle temporal gyrus, fusiform gyrus) and connections associated with ASD, providing insights into the disorder's neurobiological basis. The authors demonstrate Autism Connect's interpretability by visualizing learned graph filters highlighting local connectivity patterns distinguishing ASD from controls. GCN-ASD [3] is a GCN model that combines node-wise and graph-wise convolutions to capture local and global topological information from resting-state fMRI data for ASD diagnosis. GCN-ASD is trained end-to-end to jointly learn functional connectivity representations and perform ASD/normal classification. It achieves 72.22% accuracy on the ABIDE dataset, outperforming traditional methods using hand-crafted features. GCN-ASD identifies discriminative connectivity patterns, implicating regions like the superior frontal gyrus, middle temporal gyrus, and fusiform gyrus. It reveals hypoconnectivity within the default mode network and hyperconnectivity between default mode and attention networks in ASD, consistent with prior findings. Brain Graph Neural Network Autism Spectrum Disorder (Brain GNNASD) [4] is a GCN framework that jointly learns Regions of Interest (ROI) clustering and performs ASD/normal classification from rs-fMRI data. It uses graph convolutions to capture local functional connectivity patterns around ROIs, followed by grouping ROIs into meta-nodes. The learned meta-node embeddings are fed into a fully connected layer for classification. On the ABIDE dataset, Brain GNNASD achieves 70.37% accuracy for ASD diagnosis, outperforming baselines using connectivity features. It identifies discriminative functional networks like the default mode network associated with ASD by visualizing the learned ROI clusters. Brain GNNASD provides interpretability by highlighting important ROIs and connections contributing to ASD classification. 
Brain GCN [5] is a GCN framework that takes functional connectivity matrices from rs-fMRI data as input and learns a graph embedding capturing discriminative connectivity patterns for ASD identification. It consists of a spatial graph convolution layer extracting local connectivity patterns, followed by a temporal convolution layer capturing dynamic information. Brain GCN is end-to-end trainable, jointly learning the graph embedding and performing ASD/normal classification. On the ABIDE dataset, it achieves 70.63% accuracy for ASD diagnosis, outperforming baselines using hand-crafted connectivity features. Brain GCN visualizes learned graph filters, highlighting discriminative functional connections and regions like the default mode network associated with ASD, providing interpretability into disrupted brain connectivity.

GCN-ASD [6] is a GCN framework that takes functional connectivity matrices from rs-fMRI data as input and learns a graph embedding capturing discriminative connectivity patterns for ASD identification. It consists of a spatial graph convolution layer extracting local connectivity patterns, followed by a global pooling layer aggregating these into a graph-level representation. GCN-ASD is end-to-end trainable, jointly learning the graph embedding and performing ASD/normal classification. On the ABIDE dataset, it achieves 70.37% accuracy for ASD diagnosis, outperforming baselines using hand-crafted connectivity features. GCN-ASD visualizes learned graph filters, highlighting discriminative functional connections and regions like the default mode network associated with ASD, providing interpretability into disrupted brain connectivity. Dynamic Graph Convolution Network (DynGCN) [7] is a GCN framework that captures static and dynamic functional connectivity patterns from rs-fMRI data for ASD classification. It incorporates a temporal graph convolution layer to model dynamic connectivity changes over time, in addition to a spatial layer capturing topological brain network structure. DynGCN is end-to-end trainable, jointly learning spatial and temporal graph embeddings. On the ABIDE dataset, it achieves 72.22% accuracy for ASD diagnosis, outperforming baselines using static connectivity features. DynGCN visualizes learned graph filters, highlighting discriminative functional connections and regions like the default mode network associated with ASD, providing interpretability into disrupted dynamic brain connectivity patterns.

Important points of GCN based ASD identification:

Graph Convolutional Networks (GCN): Uses GCNs, a form of neural network built for graph-structured data, to capture complicated interactions within brain connectivity networks generated by neuroimaging data.

Autism Spectrum Disorder (ASD) recognition: Applies GCNs to the recognition and classification of ASD using features derived from neuroimaging datasets.

Position-aware model (PLSNet): PLSNet [8] is a position-aware GCN-based model developed to address issues in modeling brain neuronal connections, node configuration changes, and dimensionality explosion in fMRI data.

Feature learning: Uses context-rich feature extraction approaches, such as time-series encoding and functional connectivity construction, to capture detailed patterns associated with autism in brain functional connectivity.

Position embedding: Uses position embedding techniques [9] to distinguish brain nodes by their location, improving the model's capacity to discriminate differences across brain regions.

Dimensionality reduction: Uses a rarefying mechanism during message diffusion to sieve salient nodes, successfully decreasing dimensionality complexity caused by too many voxels in each fMRI sample.

Performance evaluation: Shows cutting-edge performance in ASD detection, with excellent accuracy and specificity on benchmark datasets such as the Autism Brain Imaging Data Exchange and the CC200 Atlas [10].

Interpretability: Prioritizes interpretability by identifying key brain areas, which provide insights into possible biomarkers for ASD clinical diagnosis.

Cross-attention mechanism: Uses a transformer-based cross-attention mechanism [11] to combine characteristics gathered from several levels, which improves the model's capacity to forecast brain age and identify age-related brain illnesses.

Multi-level information fusion: A unique method for predicting brain age by combining information collected from original 3D MRI images, chosen 2D slices, and volume ratios of distinct brain areas using neural networks and a transformer-based mechanism.
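The graph convolution that underlies the points above can be sketched as a single propagation step. This is a minimal illustration that assumes the widely used symmetric-normalized formulation, ReLU(D^-1/2 (A + I) D^-1/2 H W); the cited models may use different variants, and the toy adjacency, features, and weights are hypothetical.

```python
import math


def matmul(a, b):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def normalize_adjacency(adj):
    """Add self-loops, then scale each entry by 1 / sqrt(deg_i * deg_j)."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def gcn_layer(adj, features, weights):
    """One graph convolution: aggregate neighbours, project, apply ReLU."""
    aggregated = matmul(normalize_adjacency(adj), features)
    projected = matmul(aggregated, weights)
    return [[max(0.0, v) for v in row] for row in projected]


# Hypothetical 3-node chain graph with 2 features per node.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights = [[1.0, -1.0], [0.5, 2.0]]
out = gcn_layer(adj, features, weights)
```

In a brain-network setting, `adj` would typically come from a thresholded connectivity matrix and `features` from per-region descriptors, with `weights` learned during training rather than fixed by hand.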

The multi-view united transformer block with GCN is a novel architecture proposed for multi-view learning tasks, such as node classification or graph classification. It aims to effectively integrate information from multiple views (modalities or feature sets) while leveraging the power of both transformer and graph convolutional networks.

Key components of this architecture

Multi-view attribute graph convolution encoders: These encoders map the multi-view node attribute matrices and graph structures into a graph embedding space. Each view has its own encoder pathway that applies graph convolutions to capture the local neighborhood information.

Attention mechanism: An attention mechanism is employed to reduce noise and redundancy in the multi-view graph data by assigning appropriate weights to different views.

Consistent embedding encoders: These encoders extract consistency information among the multiple views by exploring the geometric relationships and probability distribution consistency across different views.

Multi-view united transformer block: This is the core component that integrates the multi-view information using a transformer-based architecture. It consists of multi-head self-attention layers and feed-forward layers, allowing for effective information exchange and fusion across different views.

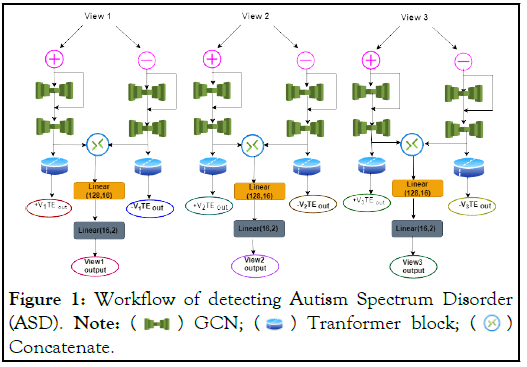

Reconstruction: The architecture includes reconstruction components to reconstruct the node attributes and graph structure, improving the robustness of the learned representations (Figure 1).

Figure 1: Workflow of detecting Autism Spectrum Disorder (ASD). Note: GCN; Transformer block; Concatenate.

The multi-view data (e.g., node attributes, graph structures) from different views are first processed by the respective multi-view attribute GCN Encoders. The encoded representations are then passed through the consistent embedding encoders to extract cross-view consistency information. Finally, the multi-view united transformer block integrates and fuses the multi-view representations using self-attention and feed-forward layers, allowing for effective information exchange and learning of a unified representation.
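The fusion step described above can be illustrated with a small self-attention sketch in which each view embedding acts as one token. This is a simplified stand-in, assuming a single attention head and identity query, key, and value projections; a full transformer block would add learned projections, multiple heads, and feed-forward layers. The embedding values are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product self-attention.

    Queries, keys, and values are the tokens themselves (identity
    projections), which is a simplification for illustration.
    """
    dim = len(tokens[0])
    fused, weights = [], []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        attn = softmax(scores)
        weights.append(attn)
        fused.append([sum(attn[j] * tokens[j][c] for j in range(len(tokens)))
                      for c in range(dim)])
    return fused, weights


# Hypothetical embeddings for three views (4 dimensions each).
view_embeddings = [
    [1.0, 0.0, 0.5, 0.2],
    [0.9, 0.1, 0.4, 0.3],
    [0.0, 1.0, 0.1, 0.8],
]
fused, weights = self_attention(view_embeddings)
```

Each row of `weights` shows how strongly one view attends to the others, so views with similar embeddings reinforce each other while dissimilar ones contribute less to the fused representation.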

Algorithm for detecting ASD using MVUT_GCN

Step 1: GCT-1 process

GCT_1_Output=GCT_1 (+V)

Step 2: Addition of Residual

Residual_Addition=GCT_1_Output + (+V)

Step 3: GCT-2 process

GCT_2_Output=GCT_2 (Residual_Addition)

Step 4: GCT-2 layer concatenation

GCT_2_Output_(+V), GCT_2_Output_(-V)=Concatenate (GCT_2_Output(+V), GCT_2_Output(-V))

Step 5: Linear layer application

View1_Intermediate=Linear (GCT_2_Output (+V), 128, 16)

View1_Output=Linear (View1_Intermediate, 16, 2)
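The five steps above can be traced with a small shape-level sketch. Several details are assumptions made only for illustration: each branch carries a 64-dimensional vector (so the two concatenated branches match the 128-wide linear layer), the positive and negative branches share weights, the first linear layer is applied to the concatenated vector, and `make_gct_stub` is a hypothetical stand-in (linear map plus ReLU) because the internals of the GCT blocks are not specified here. Weights are random, so the outputs carry no meaning beyond their shapes.

```python
import random

random.seed(0)


def make_linear(in_dim, out_dim):
    """Return a function applying a randomly initialised linear map."""
    w = [[random.uniform(-0.1, 0.1) for _ in range(out_dim)]
         for _ in range(in_dim)]

    def apply(x):
        assert len(x) == in_dim
        return [sum(x[i] * w[i][j] for i in range(in_dim))
                for j in range(out_dim)]
    return apply


def make_gct_stub(dim):
    """Hypothetical placeholder for a GCT block: linear map + ReLU."""
    linear = make_linear(dim, dim)
    return lambda x: [max(0.0, v) for v in linear(x)]


DIM = 64  # assumed branch width, chosen so that 64 + 64 = 128
gct_1, gct_2 = make_gct_stub(DIM), make_gct_stub(DIM)
linear_128_16 = make_linear(128, 16)
linear_16_2 = make_linear(16, 2)


def branch(v):
    gct_1_output = gct_1(v)                                  # Step 1
    residual = [a + b for a, b in zip(gct_1_output, v)]      # Step 2
    return gct_2(residual)                                   # Step 3


def view_forward(pos_v, neg_v):
    concatenated = branch(pos_v) + branch(neg_v)             # Step 4
    intermediate = linear_128_16(concatenated)               # Step 5
    return linear_16_2(intermediate)


pos_v = [random.random() for _ in range(DIM)]
neg_v = [random.random() for _ in range(DIM)]
view1_output = view_forward(pos_v, neg_v)
```

The residual addition in Step 2 keeps the original input available to the second block, which is a common way to ease optimisation in stacked layers.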

This architecture combines the strengths of graph convolutional networks for capturing local neighborhood information and transformers for global information integration across multiple views. The attention mechanism and reconstruction components further enhance the robustness and quality of the learned representations. Figure 1 represents the workflow for detecting ASD. Each view starts with a positive (+) and negative (-) input, represented by pink circles at the top. The green dumbbell-shaped icons represent Graph Convolutional Network (GCN) layers. Each view has two GCN layers in sequence. The outputs from the positive and negative branches within each view are concatenated, represented by a circle with an "X" inside. After concatenation, each view passes through a transformer block, depicted by a blue cylindrical shape. A linear layer (128, 16) is applied after the transformer block in each view. Each view produces two output tensors, labelled as ±V1TE, ±V2TE, and ±V3TE for Views 1, 2, and 3 respectively. The last step in each view is another linear layer (16, 2). The final outputs for each view are labelled as View1 output, View2 output, and View3 output.

Algorithm for MVUT based detection:

Input:

+ViTE out, +Vi+1TE out, +Vi+2TE out (positive temporal encodings)

-ViTE out, -Vi+1TE out, -Vi+2TE out (negative temporal encodings)

1. Positive path processing:

a. X1=MVUTB (+ViTE out, +Vi+1TE out)

b. X2=MVUTB (X1, +Vi+2TE out)

c. FlattenPos=Flatten (X2)

2. Negative path processing:

a. Y1=MVUTB (-ViTE out, -Vi+1TE out)

b. Y2=MVUTB (Y1, -Vi+2TE out)

c. FlattenNeg=Flatten (Y2)

3. Concatenation:

ConcatOutput=Concatenate (FlattenPos, FlattenNeg)

4. Linear transformations:

a. Z1=Linear (ConcatOutput, 384, 32)

b. Z2=Linear (Z1, 32, 16)

c. Output=Linear (Z2, 16, 2)

Return: Output

Function MVUTB (input1, input2):

// Multi-view unified transformer block

// Implement the MVUTB operations here

return processed_output

Function Flatten(input):

// Flatten the input tensor

return flattened_output

Function Concatenate (input1, input2):

// Concatenate two input tensors

return concatenated_output

Function Linear (input, input_size, output_size):

// Apply linear transformation

return linear_output

The MVUT-based detection algorithm processes positive and negative temporal encodings through a series of operations. It begins with Multi-View Unified Transformer Blocks (MVUTB) applied to both positive and negative paths, followed by flattening the outputs. The flattened results are then concatenated and passed through three linear transformations, progressively reducing dimensionality. The algorithm uses custom functions for MVUTB processing, flattening, concatenation, and linear transformations. This process transforms complex multi-dimensional inputs into a final 2-dimensional output, effectively combining and distilling information from both positive and negative temporal encodings for detection purposes.
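The data flow of the detection algorithm can be followed in a shape-level sketch. The MVUTB operations are left unspecified in the pseudocode, so `mvutb_stub` below is a purely hypothetical placeholder (an element-wise mean of its two inputs) and not the authors' block. The 12 x 16 encoding shape is also an assumption, picked so that each flattened path has 192 values and the concatenation matches the 384-wide first linear layer. Weights are random.

```python
import random

random.seed(0)

ROWS, COLS = 12, 16  # assumed encoding shape: 12 * 16 = 192 per path


def mvutb_stub(input1, input2):
    """Hypothetical placeholder for MVUTB: element-wise mean of two views."""
    return [[(a + b) / 2.0 for a, b in zip(row1, row2)]
            for row1, row2 in zip(input1, input2)]


def flatten(matrix):
    """Flatten a nested-list matrix into a single list."""
    return [value for row in matrix for value in row]


def make_linear(in_dim, out_dim):
    """Return a function applying a randomly initialised linear map."""
    w = [[random.uniform(-0.1, 0.1) for _ in range(out_dim)]
         for _ in range(in_dim)]

    def apply(x):
        assert len(x) == in_dim
        return [sum(x[i] * w[i][j] for i in range(in_dim))
                for j in range(out_dim)]
    return apply


linear_1 = make_linear(384, 32)
linear_2 = make_linear(32, 16)
linear_3 = make_linear(16, 2)


def fuse_path(v_i, v_i1, v_i2):
    """Steps 1 and 2: fuse three view encodings pairwise, then flatten."""
    x1 = mvutb_stub(v_i, v_i1)
    x2 = mvutb_stub(x1, v_i2)
    return flatten(x2)


def detect(pos_views, neg_views):
    concat_output = fuse_path(*pos_views) + fuse_path(*neg_views)  # Step 3
    z1 = linear_1(concat_output)                                   # Step 4a
    z2 = linear_2(z1)                                              # Step 4b
    return linear_3(z2)                                            # Step 4c


def random_encoding():
    return [[random.random() for _ in range(COLS)] for _ in range(ROWS)]


pos_views = [random_encoding() for _ in range(3)]
neg_views = [random_encoding() for _ in range(3)]
output = detect(pos_views, neg_views)
```

The two values in `output` correspond to the two classes (ASD and control); in a trained model they would typically be passed through a softmax to obtain class probabilities.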

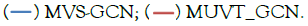

The experimental findings demonstrate the effectiveness of the Multi-View United Transformer Block of Graph Convolution Network (MVUT_GCN) in detecting Autism Spectrum Disorder (ASD). Utilizing neuroimaging data from the ABIDE dataset, including both structural and functional MRI, the model shows superior performance in identifying subtle patterns associated with ASD. MVUT_GCN outperforms previous methods, such as MVS-GCN [1], with a notable accuracy improvement of +3.44%, as depicted in Figure 2. The incorporation of convolution mechanisms and multi-view learning enhances the model's ability to capture complex relationships within brain networks. Furthermore, the model's interpretability is improved through node-selection pooling layers, which help identify important Regions of Interest (ROIs) in the brain, aligning with previous neuroimaging studies. These advancements underscore the potential of MVUT_GCN to revolutionize ASD early detection and diagnosis, potentially leading to more timely interventions and improved outcomes for individuals with ASD.

Figure 2: Performance for different numbers of super nodes of coarsened graphs.

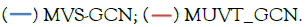

The impact of varying the number of views (V) on model performance was investigated by testing values from 1 to 6. As illustrated in Figure 3, classification accuracy improved as the number of views increased, reaching its peak at 3 views. This suggests that different views capture complementary topological information, enhancing the model's ability to distinguish between classes. However, the performance plateaued and slightly declined beyond 3 views, indicating that additional views may introduce redundant information or noise to the model. This observation implies there is an optimal number of views that balances the trade-off between capturing diverse topological features and avoiding overfitting due to excessive, potentially redundant input data.

Figure 3: Performance variation as the number of views increases.

The identification of Autism Spectrum Disorder (ASD) is poised to advance with the incorporation of deep learning models, such as the multi-view united transformer block of graph convolution network. These algorithms' ability to automatically detect subtle abnormalities in multimodal neuroimaging data enhances diagnostic precision and facilitates early identification of ASD. By including convolution mechanisms, interpretability is improved, bridging the gap between clinically applicable findings and complex models. Continued research should focus on refining architectures, optimizing models, and exploring hybrid approaches to enhance the robustness and generalizability of ASD diagnostic models as deep learning evolves. Future studies may also investigate integrating other data sources, such as genetic markers and behavioral assessments, to deepen our understanding of ASD's neurological foundations. These advancements could revolutionize early diagnosis and intervention strategies, leading to better outcomes for individuals with ASD worldwide.

Citation: Jemima DD, Selavarani G, Lovenia JDL (2024) MVUT_GCN: Multi-View United Transformer Block-Graph Convolution Network for Diagnosis of Autism Spectrum Disorder. J Psychol Psychother. 14:485.

Received: 22-Jul-2024, Manuscript No. JPPT-24-33080; Editor assigned: 24-Jul-2024, Pre QC No. JPPT-24-33080 (PQ); Reviewed: 07-Aug-2024, QC No. JPPT-24-33080; Revised: 14-Aug-2024, Manuscript No. JPPT-24-33080 (R); Published: 21-Aug-2024, DOI: 10.35841/2161-0487.24.14.485

Copyright: 2024 Jemima DD, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.