Journal of Proteomics & Bioinformatics

Open Access

ISSN: 0974-276X

Research Article - (2019) Volume 12, Issue 1

In conditions involving sensory processing dysfunction, such as autism spectrum disorder (ASD), and cognitive decline, such as Alzheimer's disease (AD), promising neurological improvements have been recorded after systematic sensorimotor enrichment stimulation and art-therapeutic procedures. While art therapy may offer a cognitive-behavioral breakthrough, several recent studies have explored the effectiveness of representing brain signals through audio and visual transformations of electroencephalography (EEG) measurements, and the necessity of neurological evaluation of sensory integration therapies. In this technical report, new software based on the Java and Processing programming languages is presented that detects, displays and analyzes EEG signals for an individual patient or a group of patients. This neuroinformatics software offers a digital painting environment and a real-time transformation of EEG signals into music, with adjustable volume and octave configuration per electrode, for the real-time observation and evaluation of therapeutic procedures. Because EEG acquisition is wireless, brain data can also be collected during other sensory or sensorimotor therapeutic sessions. The Neurocognitive Assessment Software for Enrichment Sensory Environments (NASESE) includes two main functionalities, organized in five modules. The first functionality includes recording, filtering and visualization of the EEG signals, exported to a rotating 3D brain model, and a real-time transformation of brain activity into sound sculptures; the second generates statistical tests and coherence calculations in a fully customizable computerized environment.

Keywords: Aging; Alzheimer's disease; Art therapy; Autism spectrum disorders; Brain waves; Central nervous system diseases; Dementia; Eclipse programming language; Electroencephalography; Enrichment sensory environments; Java; Processing programming language

For years, scientists have been seeking alternative solutions to manage disorders of the central nervous system (CNS), based on the theory of brain plasticity in Parkinson's disease (PD) and ASD [1-3], on the relationship between the gut microbiota and the CNS in ASD [4], or on statistical tools for the early diagnosis and prediction of AD [5-8]. In this direction, EEG systems were invented to record electrical activity on the scalp surface and to draw conclusions about the functionality of the human brain [9-11]. Since its introduction, EEG testing has been the most common and simplest method for identifying electrophysiological abnormalities in the human brain and their correlation to ASD and other related disorders. Although EEG cannot reveal the etiology of these diseases and does not provide the background needed for holistic treatment formulas, it offers an efficient measurement of signals from the patient's cerebrum, owing to recent significant improvements in EEG resolution [12]. While neurodegenerative disorders in most cases remain undetectable and hardly curable, scientists often apply non-invasive therapeutic procedures, such as cognitive-behavioral therapy and art therapy, or medications [13]. Art therapy in dementia can be defined as a process that leads to the cognitive and mental treatment of the patient using artistic tools and media [14], engaging attention, providing pleasure, improving behavior and communication, and reducing anxiety, agitation and depression [15]. In AD, for example, watercolor can be used to promote freedom of expression or to encourage personal choice and control [16], while sensory integration therapy involving vestibular, proprioceptive, auditory and tactile inputs, delivered with brushes, swings and balls, is often used to treat children with autism [17-20].
Initially, art therapy was psychologically oriented, but researchers have recently started seeking correlations with other scientific fields [14]. Although art therapy techniques vary, a transference relationship between the patient and the therapist is required [21]. In its primary form, art therapy was established on the basis of a dynamic interaction between two persons (patient and clinician), in which the patient creates art to be evaluated. In traditional expressive therapy, there is usually no computational device or software involved in the procedure, and even when such media are present, they are not actively used in all therapeutic phases. Several published studies demonstrate the combination of enriched sensorimotor stimulation with art therapy in patients with neurodegeneration to improve mood and memory and to reduce agitation and apathy [22-26]. Enhanced environmental stimulation improves dendritic branching and synaptic density, resulting in neurogenesis [27,28], and many improvements in patients' behavior have been achieved so far, even though several years of training and supervision are required for positive outcomes in patients with ASD [29], dementia or other brain lesions. Recent studies have also shown that the use of art therapy results in significant differences in EEG measurements [30]. The evaluation of art therapy programs mainly consists of quantitative analysis of participants' evaluations based on questionnaires, expectations achieved, and likes and dislikes [31-33]. New automated computational methods therefore appear to be needed to evaluate the efficacy of cognitive-behavioral therapy methods on a neurological basis, in order to further reduce education and treatment time and to make it possible to implement effective techniques and obtain reproducible results.
The neuroinformatics software NASESE, presented in this technical report and described in the following sections, focuses on non-invasive behavioral analysis and art therapy. Even though EEG data acquisition depends strongly on the EEG machine [34], the core functionality of this software, namely the analysis and visualization of brain signals and the neurological testing of participants in enrichment environments and art therapy sessions, is independent of the equipment.

Software description

The software is coded in the Processing programming language, with some parts written in pure Java. Multiple libraries were designed and coded from scratch for sound, graphics and serial communication between the EEG devices and the computer. The final project was debugged in the Eclipse integrated development environment and exported as a Java executable application. The code, the required libraries and basic instructions are available on GitHub (https://github.com/nasese). Using NASESE in a therapeutic session involves a neurologist, a therapist and the patient, who continuously exchange data through networked electronic devices and a portable EEG device (Figure 1). The software consists of five modules named 'Brain Visualization', 'Spectrogram', 'Colors', 'Sounds' and 'Statistics' (Figure 2). In one of its embedded functionalities, the neurologist launches the 'Colors' module on the first computer and initializes it on the second computer, and the therapist starts the therapeutic protocol in the computerized enrichment environment. With many options for parameterizing filters, frequency thresholds and statistical tests, EEG data are stored, analyzed and further processed by the doctor. Because EEG acquisition is wireless, data can also be collected during other sensory, sensorimotor and artistic therapeutic protocols. All executed tests and exported results are saved digitally and can be accessed at any later time by the therapists or the medical staff for further research.

Data collection and processing

The current version of the software is programmed to work with 16-channel EEG devices based on the 10/20 system (Figure 3), which can be connected to the computer either directly or through a Bluetooth module. At this stage, the software has been tested on the 32-bit OpenBCI Board, constructed for research purposes only [35], equipped with the Microchip PIC32MX250F128B microcontroller and operated by a compatible personal computer with an average-performance configuration (Intel(R) Core(TM) i7 series processor at 2.2 GHz, 6 GB RAM, AMD High-Definition Graphics Driver version 8.882.2.0 / NVIDIA GeForce 10 series version 3.1.0.52, a sound card, and Windows 7, 8 or 10). Initially, the EEG device detects electrical activity on the surface of the patient's scalp and encodes it as bytes. The data are then sent to the computer as digital information, and the software converts the received data back into microvolts (µV) for further processing. After a bandpass filter with a low cutoff of 0.3 Hz and a high cutoff of 30 Hz and a 50 Hz notch filter have been applied, the signals can be used by the software modules. The user can adjust the notch and bandpass filters in the top menus [34,36,37]: 50 Hz and 60 Hz notch filters are available, as are bandpass filters of 1-50, 7-13, 15-50, 5-50 and 0.30-30 Hz. Under the spectrograms, an array shows the µV amplitude of each electrode in real time, together with a Fast Fourier Transform (FFT) plot. The FFT plot is created using functions, objects and classes from the Minim library of the Processing programming language. EEG signals include artifacts such as eye- and muscle-generated artifacts, high-amplitude artifacts and signal discontinuities [38]. Therefore, using a wavelet-thresholding approach, the software processes, analyzes and displays the data without eye-blink artifacts.
In general, artifacts that probably result from abnormal behavior are not excluded in this version, although users can apply external tools [36] to remove them. While our primary goal is to use the software for art and behavioral therapy, mainly in ASD patients, recent studies have clinically demonstrated that behavioral characteristics may introduce artifacts into the EEG signals that are very difficult to identify in young ASD patients [37]. Moreover, while recent studies have concluded that classifying ASD patients on the basis of EEG signal analysis alone is inefficient [39], calculating and studying the coherence differences between children with ASD and healthy controls could be a potential solution. For these reasons, this first version of the software gives priority to statistical tests and the calculation of coherence differences, as a potential way of revealing patterns and a future route to ASD classification and early diagnosis, and to the use of the software as a non-invasive behavioral tool for individualized art therapy. Samples are not classified automatically: the status of the patients under investigation is known a priori, and the recordings are assigned manually to patient and healthy control groups and imported separately into the program for further statistical analysis and coherence calculation.
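The notch-filtering step described in this section can be illustrated with a minimal sketch. It is not taken from the NASESE source code: it uses a generic second-order (biquad) notch design, and the class name `NotchFilter`, the quality factor of 30 and the 250 Hz sampling rate (suggested by the 250 samples checked per second in the threshold controller) are assumptions made only for this example.

```java
/** Second-order IIR notch filter in the common "biquad" form (illustrative, not the NASESE code). */
final class NotchFilter {
    private final double b0, b1, b2, a1, a2; // coefficients normalized by a0
    private double x1, x2, y1, y2;           // two previous inputs and outputs

    /**
     * @param notchHz  frequency to reject, e.g. 50 or 60 Hz mains interference
     * @param sampleHz sampling rate in Hz (250 Hz is an assumption in this sketch)
     * @param q        quality factor; higher values give a narrower notch (assumed value)
     */
    NotchFilter(double notchHz, double sampleHz, double q) {
        double w0 = 2.0 * Math.PI * notchHz / sampleHz;
        double alpha = Math.sin(w0) / (2.0 * q);
        double a0 = 1.0 + alpha;
        b0 = 1.0 / a0;
        b1 = -2.0 * Math.cos(w0) / a0;
        b2 = 1.0 / a0;
        a1 = -2.0 * Math.cos(w0) / a0;
        a2 = (1.0 - alpha) / a0;
    }

    /** Filters one sample (for example in microvolts) and returns the filtered value. */
    double process(double x) {
        double y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2;
        x2 = x1; x1 = x;
        y2 = y1; y1 = y;
        return y;
    }

    /** Feeds n samples of a unit sine at toneHz through f and returns the RMS of the last `tail` outputs. */
    static double tailRms(double toneHz, double sampleHz, int n, int tail, NotchFilter f) {
        double sumSq = 0.0;
        for (int i = 0; i < n; i++) {
            double y = f.process(Math.sin(2.0 * Math.PI * toneHz * i / sampleHz));
            if (i >= n - tail) sumSq += y * y;
        }
        return Math.sqrt(sumSq / tail);
    }
}
```

In this sketch a 50 Hz tone is suppressed once the filter has settled, while a 10 Hz tone in the alpha range passes almost unchanged; a bandpass stage such as 0.3-30 Hz would be a separate filter applied in the same sample-by-sample manner.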

Software validation

NASESE has been validated and verified against a published clinical ASD dataset concerning the evaluation of cerebral functioning from EEG signals [40]. The quantitative EEG analysis described in the reference study was performed between two groups of children, 21 with ASD and 21 without ASD, and differences in cerebral functioning were reported in the intrahemispheric and interhemispheric coherences [40]. Simulation data [41] were generated and processed according to that study in order to check the tests and results between the non-ASD and ASD groups. Significant differences were observed within the groups between the different brain regions: in delta and theta power at the frontal region in children with ASD, and in alpha and beta power in the frontal, central and posterior regions in children with ASD, similar to the reference study [40].

Brain visualization module

The cerebrum's regions can be examined in a rotating 3D model (Figure 4). The model was created in the Blender environment [42] and can be rotated in all directions with the relevant arrow buttons (up, down, left, right). The model is divided into eight brain regions, each corresponding to specific electrodes: left frontal (Fp1 - F3 - F7), right frontal (Fp2 - F4 - F8), left central/parietal (C3 - P3), right central/parietal (C4 - P4), left temporal (T3 - T5), right temporal (T4 - T6), left occipital (O1) and right occipital (O2). Within each brain region a light object is displayed, which is related to the underlying frequencies. The object changes color depending on the EEG input and the frequency thresholds selected for each channel: each region is colored green for normal measurements and red for abnormal measurements, while gray is the default color. An additional button allows real-time threshold adjustment and observation. This threshold button opens the 'Threshold Controller' window (Figure 5), which presents an array of editable text fields for threshold handling. The array lists the name of each electrode/channel and its threshold bounds. Each channel has two threshold values, a low one and a high one. If the values of the corresponding electrode lie between the low and the high threshold, the threshold values appear in green. If the electrode's value falls below the low threshold, the low threshold turns red; likewise, if a value above the high threshold is observed, the high threshold turns red. If the low threshold is set higher than the high threshold, the label "invalid values" appears. In this array, a combination of electrodes is used for certain brain regions. Every second, 250 samples are checked for each channel, and if at least one sample is out of bounds, the corresponding threshold turns red.
For example, the left frontal area consists of the Fp1, F3 and F7 electrodes. Whenever an out-of-bounds value occurs for at least one of these electrodes, the corresponding light turns red in the 3D brain model. If the imported values of all electrodes in a region are normal according to the selected thresholds, the light turns green. Apart from the Threshold Controller window, a second window shows, for each channel, the times during the EEG recording at which abnormal values were identified by the program (Figure 6).
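The per-second threshold check and the region coloring described above can be sketched as follows. This is only an illustration of the stated rules, not the NASESE implementation: the class and method names are hypothetical, the electrode groups are copied from the list of regions above, and treating a value exactly equal to a bound as normal is an assumption, since the text does not say how the boundaries are handled.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative threshold check; names and boundary handling are assumptions of this sketch. */
final class RegionThresholdCheck {
    enum Status { NORMAL, ABNORMAL, INVALID } // shown as green, red, "invalid values"

    /** Electrode groups per brain region, as listed in the text. */
    static final Map<String, String[]> REGIONS = new LinkedHashMap<>();
    static {
        REGIONS.put("LT Frontal", new String[] {"Fp1", "F3", "F7"});
        REGIONS.put("RT Frontal", new String[] {"Fp2", "F4", "F8"});
        REGIONS.put("LT Parietal/Central", new String[] {"C3", "P3"});
        REGIONS.put("RT Parietal/Central", new String[] {"C4", "P4"});
        REGIONS.put("LT Temporal", new String[] {"T3", "T5"});
        REGIONS.put("RT Temporal", new String[] {"T4", "T6"});
        REGIONS.put("LT Occipital", new String[] {"O1"});
        REGIONS.put("RT Occipital", new String[] {"O2"});
    }

    /** Checks one channel's one-second window (e.g. 250 samples) against its low/high bounds. */
    static Status checkChannel(double[] window, double low, double high) {
        if (low > high) return Status.INVALID;
        for (double v : window) {
            if (v < low || v > high) return Status.ABNORMAL; // one out-of-bounds sample is enough
        }
        return Status.NORMAL;
    }

    /**
     * A region is ABNORMAL if at least one of its electrodes is out of bounds and NORMAL otherwise.
     * bounds maps each electrode name to {low, high}; windows maps it to the latest samples.
     */
    static Status checkRegion(String region, Map<String, double[]> windows, Map<String, double[]> bounds) {
        Status result = Status.NORMAL;
        for (String electrode : REGIONS.get(region)) {
            double[] b = bounds.get(electrode);
            Status s = checkChannel(windows.get(electrode), b[0], b[1]);
            if (s == Status.INVALID) return Status.INVALID;
            if (s == Status.ABNORMAL) result = Status.ABNORMAL;
        }
        return result;
    }
}
```

With this structure, a single out-of-range sample on F3 is sufficient to mark the whole left frontal region as abnormal, which matches the behavior described for the light in the 3D model.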

Spectrogram module

The spectrogram of each electrode is also displayed in real time. This module provides buttons for the delta, theta, alpha, beta, gamma and mu bands. When one of these buttons is selected, the signals are filtered and the spectrograms show the corresponding band (Figures 7-12). For the mu rhythm, unipolar measurements are applied and the user can choose either Cz or A1/A2 as the system reference electrodes. If the user selects the option “All”, no specific band is isolated (Figure 13). A timer is located below the start button. When a file is imported, an additional button labeled "OPEN COHERENCE" appears, which displays all the available coherences between the electrode pairs (Figure 14). The Welch method [43-45] is used to calculate the auto spectral and cross spectral densities for each pair of electrodes. The Welch method cuts a signal into segments and applies a Hamming window to each. Then, by applying the Fourier transform, the auto spectral and cross spectral densities can be calculated; these are the fundamental elements of the coherence formula, as described below in the 'Statistics' module.
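A minimal sketch of a Welch-style coherence estimate for one electrode pair is given here to make the segmentation, windowing and averaging steps concrete. It is an illustration under stated assumptions rather than the NASESE code: the class name `WelchCoherence` is hypothetical, a plain discrete Fourier transform is used instead of an FFT library for self-containment, the segment overlap is fixed at 50%, and the output is assumed to be the magnitude-squared coherence.

```java
/** Illustrative Welch-style magnitude-squared coherence (not the NASESE implementation). */
final class WelchCoherence {
    /**
     * Returns the coherence for frequency bins 0..segLen/2.
     * Bin k corresponds to k * sampleRate / segLen Hz.
     */
    static double[] coherence(double[] x, double[] y, int segLen) {
        int hop = segLen / 2;                 // 50% overlap between consecutive segments
        int bins = segLen / 2 + 1;
        double[] pxx = new double[bins], pyy = new double[bins];
        double[] pxyRe = new double[bins], pxyIm = new double[bins];
        double[] win = new double[segLen];
        for (int n = 0; n < segLen; n++) {    // Hamming window
            win[n] = 0.54 - 0.46 * Math.cos(2.0 * Math.PI * n / (segLen - 1));
        }
        int len = Math.min(x.length, y.length);
        for (int start = 0; start + segLen <= len; start += hop) {
            for (int k = 0; k < bins; k++) {
                double xr = 0, xi = 0, yr = 0, yi = 0;
                for (int n = 0; n < segLen; n++) { // direct DFT of the windowed segment
                    double ang = -2.0 * Math.PI * k * n / segLen;
                    double c = Math.cos(ang), s = Math.sin(ang);
                    double xv = x[start + n] * win[n], yv = y[start + n] * win[n];
                    xr += xv * c; xi += xv * s;
                    yr += yv * c; yi += yv * s;
                }
                pxx[k] += xr * xr + xi * xi;       // auto spectrum of x
                pyy[k] += yr * yr + yi * yi;       // auto spectrum of y
                pxyRe[k] += xr * yr + xi * yi;     // cross spectrum conj(X) * Y, real part
                pxyIm[k] += xr * yi - xi * yr;     // cross spectrum conj(X) * Y, imaginary part
            }
        }
        // Sums are used instead of means: the 1/K averaging factor cancels in the ratio.
        double[] coh = new double[bins];
        for (int k = 0; k < bins; k++) {
            double denom = pxx[k] * pyy[k];
            coh[k] = denom > 0 ? (pxyRe[k] * pxyRe[k] + pxyIm[k] * pxyIm[k]) / denom : 0.0;
        }
        return coh;
    }
}
```

Two identical signals give a coherence of 1 in every bin, whereas unrelated signals give values that shrink towards 0 as more segments are averaged, which is why short recordings tend to overestimate coherence.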

Colors module

A digital painting environment designed for patients with cognitive impairment is provided, in which options and buttons are distinct and easy for patients to memorize. The patient uses this multi-preference environment on a second computer screen according to the therapist's protocol, while the clinician simultaneously evaluates the procedure through the EEG data visualization. Several options are offered to the users, and the therapist or the clinician can save the artworks and the EEG results for future use (Figure 15).

Sound module

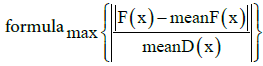

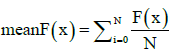

Sound console involves the transformation of EEG signals into sound (Figure 16). The sound module uses frequencies from the eight selected brain regions (LT Frontal, RT Frontal, LT Parietal/Central, RT Parietal/Central, LT Temporal, RT Temporal, LT Occipital, RT Occipital). For each channel the selected notes are generated using the  , where F(x) is the Fourier Spectrum for the current sample and

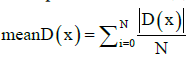

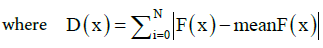

, where F(x) is the Fourier Spectrum for the current sample and  . The meanF(x) is the mean Fourier Spectrum of the samples. The meanD(x) function is the mean normalized distance,

. The meanF(x) is the mean Fourier Spectrum of the samples. The meanD(x) function is the mean normalized distance,  ,

,  This formula uses normalized values and returns the most intense change in the spectrum.

This formula uses normalized values and returns the most intense change in the spectrum.

Figure 16: The sound module includes a SPEED bar for the speed at which notes are played, a Mean bar for combining multiple notes into their mean, and three buttons for saving to multiple file types. The MIDI file can be opened as sheet music in other capable programs for composition or improvisation based on EEG signals. There is also a START SOUND button for enabling the console, and controls for the octave and the volume of each brain region.

Finally, for each electrode, the frequency with the highest normalized change is chosen. Channels can be switched on or off. Volume and octave are adjustable for each electrode individually, and the generated sound sculptures can be exported and converted into MP3 or MIDI format. Furthermore, speed, mean configurations for choosing notes, and volume handling options are available to the user.

Speed controls the time between notes. For example, reducing the speed bar increases the number of notes played per second. The Mean option takes values from the selected notes and creates a new note whose frequency is the average of their frequencies. Generating sound as direct biofeedback from brain signals can help therapists and clinicians recognize real-time temporal patterns related to behavioral and cognitive abnormalities (Audio 1) [46], and it can also be used for individualized sound therapy (Audio 2).

Audio 1: A random music sculpture generated in real time from EEG sampling.

Audio 2: A processed version of Audio 1, obtained by applying certain musical rules for potential sound treatment.
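The octave and Mean options of the sound module can be illustrated with a short sketch. How NASESE maps a chosen EEG frequency onto a playable note is not specified in the text, so everything here is an assumption made for illustration: the class name `NoteMapper` is hypothetical, an octave shift is taken to double or halve the frequency, and the conversion to a MIDI note number uses the standard equal-temperament relation with A4 = 440 Hz = note 69.

```java
/** Illustrative helpers for turning EEG-derived frequencies into notes (assumed mapping). */
final class NoteMapper {
    /** Shifts a frequency by whole octaves; each octave up doubles the frequency. */
    static double shiftOctaves(double hz, int octaves) {
        return hz * Math.pow(2.0, octaves);
    }

    /** "Mean" option as described in the text: the new note is the average of the selected frequencies. */
    static double meanFrequency(double[] hz) {
        double sum = 0.0;
        for (double f : hz) sum += f;
        return sum / hz.length;
    }

    /** Nearest MIDI note number in twelve-tone equal temperament (A4 = 440 Hz = note 69). */
    static int toMidiNote(double hz) {
        return (int) Math.round(69.0 + 12.0 * Math.log(hz / 440.0) / Math.log(2.0));
    }
}
```

For instance, a 10 Hz alpha-band component raised by five octaves becomes 320 Hz, which lies in the audible range, and averaging 400 Hz and 480 Hz yields 440 Hz, the note A4.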

Statistics module

Previously saved recordings can be tested and statistically evaluated, and the results for significant differences in the EEG waves are displayed. The results are expressed as the mean absolute power in the brain regions and the coherence between pairs of electrodes. Initially, a W/S normality test is used to check the distribution of the mean absolute power data. If the data are normally distributed, a t-test is applied; otherwise a u-test is chosen (Figure 17), and the p-values for all the corresponding wave bands are calculated. For the coherence, the chi-square test is used to examine significant differences between brain regions.
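The u-test branch can be sketched with the textbook normal approximation of the Mann-Whitney test. This is a hedged illustration, not the formula coded in NASESE: the class name `MannWhitney` is hypothetical, no tie or continuity correction is applied, and the normal distribution function is computed with the Abramowitz-Stegun approximation of the error function because the Java standard library does not provide one. The W/S normality test and the t-test branch are not shown.

```java
import java.util.Arrays;

/** Illustrative Mann-Whitney u-test with a normal approximation (assumed textbook form). */
final class MannWhitney {
    /** U statistic of sample a, using average ranks for tied values. */
    static double uStatistic(double[] a, double[] b) {
        int n = a.length, m = b.length;
        double[] all = new double[n + m];
        System.arraycopy(a, 0, all, 0, n);
        System.arraycopy(b, 0, all, n, m);
        Arrays.sort(all);
        double rankSum = 0.0;                      // W: sum of the ranks of sample a
        for (double v : a) {
            int lo = 0;
            while (all[lo] < v) lo++;              // first position holding v
            int hi = lo;
            while (hi + 1 < all.length && all[hi + 1] == v) hi++; // last position holding v
            rankSum += (lo + hi) / 2.0 + 1.0;      // average 1-based rank
        }
        return rankSum - n * (n + 1) / 2.0;
    }

    /** Two-sided p-value from the normal approximation (no tie or continuity correction). */
    static double pValue(double[] a, double[] b) {
        int n = a.length, m = b.length;
        double mean = n * m / 2.0;
        double sd = Math.sqrt(n * m * (n + m + 1) / 12.0);
        double z = (uStatistic(a, b) - mean) / sd;
        return 2.0 * (1.0 - normalCdf(Math.abs(z)));
    }

    /** Standard normal CDF for x >= 0 via the Abramowitz-Stegun 7.1.26 erf approximation. */
    static double normalCdf(double x) {
        double u = x / Math.sqrt(2.0);
        double t = 1.0 / (1.0 + 0.3275911 * u);
        double poly = t * (0.254829592 + t * (-0.284496736 + t * (1.421413741
                + t * (-1.453152027 + t * 1.061405429))));
        double erf = 1.0 - poly * Math.exp(-u * u);
        return 0.5 * (1.0 + erf);
    }
}
```

The resulting p-value can then be compared with whatever significance threshold the user has selected, for example 0.05 or the stricter 0.005.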

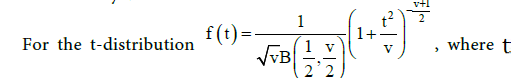

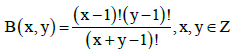

In order to calculate the p-values in adjustable threshold and reduced even to the lately proposed 0.005 (Benjamin, 2017), the following automated formulas have been coded instead of the manual application of the commonly used t-table and z-table:

= t value, =degrees of freedom and

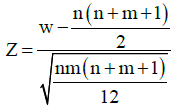

= t value, =degrees of freedom and and for the u-test the Mann-Whitney test that uses a normal approximation method to determine the p-value of the test in the form

and for the u-test the Mann-Whitney test that uses a normal approximation method to determine the p-value of the test in the form  , where W is the Mann-Whitney test statistics and n,m are the size of sample1 and sample2 (group of patient). For the coherence calculation, the pairs of electrodes (Fp1 - Fp2), (F7 - F8), (F3 - F4), (T3 - T4), (T5 - T6), (C3 - C4), (P3 - P4), (O1 - O2), (Fp1- F3), (Fp2 - F4), (T3 - T5), (T4 - T6), (C3 - P3), (C4 - P5), are selected to be displayed. For the coherence estimation, the Welch method is used, as mentioned before, according to the following formula [43-45]

, where W is the Mann-Whitney test statistics and n,m are the size of sample1 and sample2 (group of patient). For the coherence calculation, the pairs of electrodes (Fp1 - Fp2), (F7 - F8), (F3 - F4), (T3 - T4), (T5 - T6), (C3 - C4), (P3 - P4), (O1 - O2), (Fp1- F3), (Fp2 - F4), (T3 - T5), (T4 - T6), (C3 - P3), (C4 - P5), are selected to be displayed. For the coherence estimation, the Welch method is used, as mentioned before, according to the following formula [43-45]

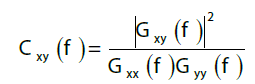

Where  is the cross spectral density of x and y,

is the cross spectral density of x and y, is the auto spectral density of x and

is the auto spectral density of x and  is the auto spectral density of y. Welch method compares the two signals and analyzes the coherence. There are three stages. Firstly, each signal is cut into fragments according to the most common overlapping 50%, and each fragment is multiplied with a Hamming window. Then, the power spectrum of all segments is calculated. The last step is to take an average of the power spectrums. The results are

is the auto spectral density of y. Welch method compares the two signals and analyzes the coherence. There are three stages. Firstly, each signal is cut into fragments according to the most common overlapping 50%, and each fragment is multiplied with a Hamming window. Then, the power spectrum of all segments is calculated. The last step is to take an average of the power spectrums. The results are  .Then spectral densities are used in the formula of coherence for the corresponding pair. For the verification of the software, simulation data was created and significant differences in mean absolute power were observed in delta band in LT frontal region with p-value 0.01 and RT frontal region with a p-value of 0.01, while in RT central region in beta band, significant differences were found with a p-value of 0.05, similar to the reference study [47], among other significant differences due to the simulation values, that there were not observed initially.

.Then spectral densities are used in the formula of coherence for the corresponding pair. For the verification of the software, simulation data was created and significant differences in mean absolute power were observed in delta band in LT frontal region with p-value 0.01 and RT frontal region with a p-value of 0.01, while in RT central region in beta band, significant differences were found with a p-value of 0.05, similar to the reference study [47], among other significant differences due to the simulation values, that there were not observed initially.
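For orientation, the coherence computation described in this module can be written out in its usual textbook form. The notation is an assumption, since the symbols of the original formula are not recoverable here: X_k(f) and Y_k(f) denote the Fourier transforms of the k-th Hamming-windowed segment of the two signals, K is the number of segments, and the result is taken to be the magnitude-squared coherence, which may differ from the exact variant coded in the software.

```latex
\hat{P}_{xy}(f) = \frac{1}{K}\sum_{k=1}^{K} X_k^{*}(f)\,Y_k(f), \qquad
\hat{P}_{xx}(f) = \frac{1}{K}\sum_{k=1}^{K} \lvert X_k(f)\rvert^{2}, \qquad
\hat{P}_{yy}(f) = \frac{1}{K}\sum_{k=1}^{K} \lvert Y_k(f)\rvert^{2}

C_{xy}(f) = \frac{\lvert \hat{P}_{xy}(f)\rvert^{2}}{\hat{P}_{xx}(f)\,\hat{P}_{yy}(f)}, \qquad 0 \le C_{xy}(f) \le 1
```

A value near 1 at a given frequency indicates that the two electrodes are linearly related at that frequency, while a value near 0 indicates no consistent linear relation across the averaged segments.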

Computerized cognitive-behavioral therapy software can be classified into two categories: software focusing on brain recovery through aesthetic art projects and multisensory enriched environments, whose efficacy is examined by comparing EEG data before and after art-making [30], and more sophisticated Brain-Computer Interaction (BCI) neuroinformatics applications [48,49]. Researchers have recently moved a step further in art therapy research by using the non-invasive sonogenetics method, in which low-pressure ultrasound is applied to activate neurons even in deeper brain regions [50]. In another recent study, the 'encephalophone' mu-device is presented for the creation of scalar music in real time using EEG signals [51], taking advantage of the potential benefits that scalar musical tones and auditory stimuli offer for training, including therapeutic procedures, in comparison to visual stimuli [52]. The NASESE software presented in this technical report is not just a simple automated platform for EEG acquisition, but an application for the real-time neurological evaluation of multisensory enriched environments. It includes real-time processing and visualization of brain waves and offers interaction with the patient for the generation of scalar musical notes of the same frequency as the tested brain signals. Many important functionalities are offered, and the generated data are stored in such a way that the number of channels can be modified in the future. Moreover, the user can select certain brain areas involved in specific functional processes to be represented as audio [46], taking into account that audio representations can reveal fluctuations of the EEG signals that are correlated with certain brain functions and with changes in behavior and cognitive ability more distinctly than visual representations [46,53,54].
The 'Statistics' module of the software is programmed to identify and filter data from different groups of patients, and because the user can select the preferred frequency threshold, it can be used for CNS disorders such as PD, AD or ASD. The 'Colors' module can be used to test the improvement of AD patients by displaying colors that may reinforce or improve patients' memory [55]. Taking into consideration the decreased visibility of spectral illusory colors in AD patients and the advantages of long-term light therapies for rest/activity rhythms and sleep efficiency in dementia patients, future versions of the software will include more specialized audio and visual enriched environments for patients, therapists and neurologists [56]. For the audio representations in particular, users will be able to create melodies based on the note sequences, and the extracted frequencies will also be used as motifs for advanced searching of orchestral music with a high number of similar notes.

AA, AP, GA study concept and design, analysis and interpretation of data, study supervision, preparation of the final manuscript, critical revision of manuscript for intellectual content. CS study design, analysis and interpretation of data, acquisition of data, coding supervision, coding, and preparation of the final manuscript. TV coding the first version of the music module. MV coding the first version of the colors module. SP study concept, artistic counseling and designing of the upcoming validation procedure.

The authors declare that this work was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

AA gratefully acknowledges the facilities provided by the AFNP Med (Engineering, Chemicals and Consumables GmbH), Austria. GA gratefully acknowledges the facilities provided by King Fahd Medical Research Center (KFMRC) and Deanship of Scientific Research (DSR), King Abdulaziz University, Jeddah, Saudi Arabia.

The publication cost and the hardware purchase have been supported by the AFnP Med (Engineering, Chemicals and Consumables GmbH), Austria.